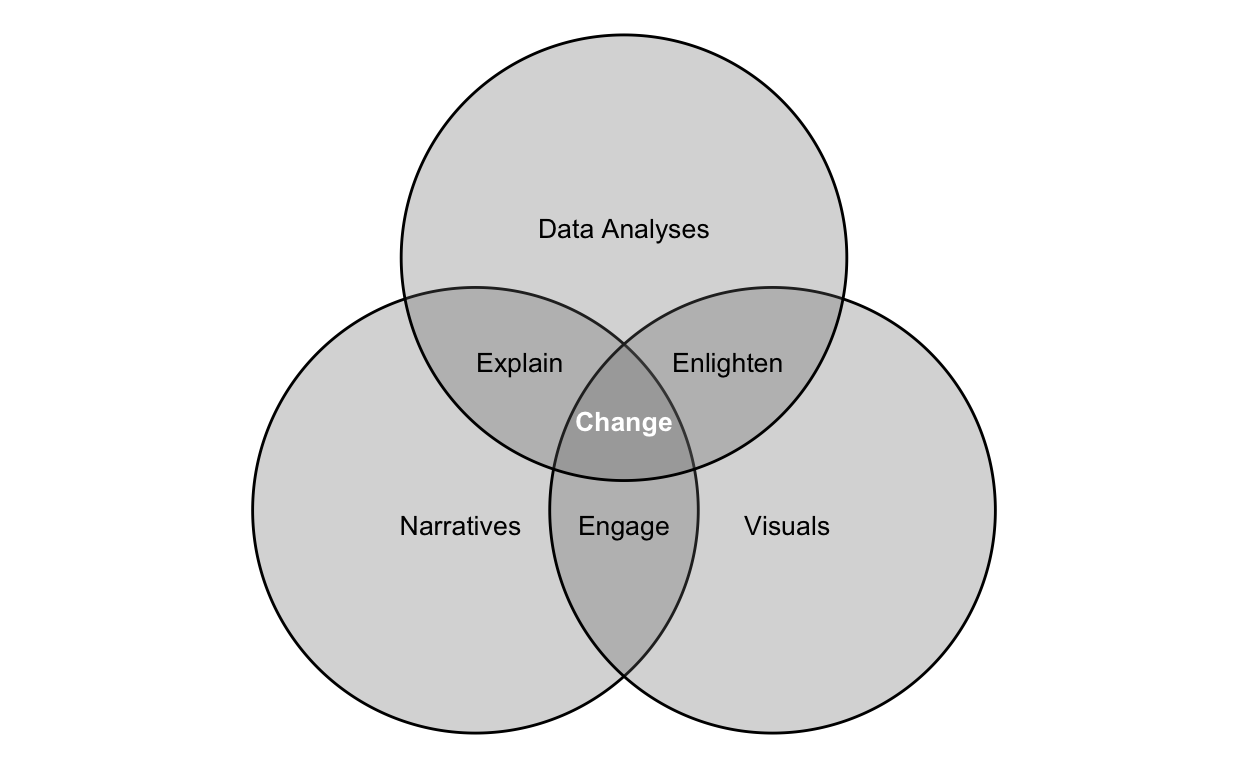

Narrative and story can enhance others’ understanding of data, offering meaning and insights as communicated in numbers and words, or in graphical encodings. Carefully combined, narratives, data analyses, and visuals can help enable change:

Empirical studies suggest that communication is generally more effective when its author controls all aspects of it, from content to typography and form. But in some cases, we may enhance the communication by allowing our audience to choose among potential contexts of the information. Here, we aim to explore many ideas within this framework, using as content a data analytics project. We will start by exploring our content (a proposed and implemented data analytics project) and our intended audiences (executives, more general audiences, and mixed audiences) through narrative, considering not just our words but the typographic forms of those words in the chosen communication medium. Then we begin to integrate other, visual forms of data representation into the narrative. As our discussions of graphical data encodings become more complex, we give them focus in the form of information graphics and dashboards, and finally, through interactive design, enable our audience to act, to some degree, as co-author of the communication.

The readers who will get the most from this text, and whom I have in mind as my audience, are curious, active learners:

An active learner asks questions, considers alternatives, questions assumptions, and even questions the trustworthiness of the author or speaker. An active learner tries to generalize specific examples, and devise specific examples for generalities.

An active learner doesn’t passively sponge up information — that doesn’t work! — but uses the readings and lecturer’s argument as a springboard for critical thought and deep understanding.

This text isn’t meant to be an end, but a beginning, giving you hand-selected, seminal and cutting-edge references for the concepts presented. Go down these rabbit holes, following citations and studying the cited material. Becoming an expert in storytelling with data also requires practicing. Indeed,

Learners need to practice, to imitate well, to be highly motivated, and to have the ability to see likenesses between dissimilar things in [domains ranging from creative writing to mathematics] (Gaut 2014).

You may find some concepts difficult or vague on a first read. For that, I’ll offer encouragement from Abelson (1995),

I have tried to make the presentation accessible and clear, but some readers may find a few sections cryptic …. Use your judgment on what to skim. If you don’t follow the occasional formulas, read the words. If you don’t understand the words, follow the music and come back to the words later.

Let’s dive in!

1 Narrative

1.1 Analytics communication scopes

1.1.1 Scopes

One place the general scope of analytics projects arises is within science (in which data science exists) and proposal writing. Let’s first just consider basic informational categories (in typical, but generic structure or order) that generally form a research proposal, see (Friedland, Folt, and Mercer 2018), (Oster and Cordo 2015), (Schimel 2012), (Oruc 2011), and (Foundation 1998), keeping in mind that the particular information and ordering explicitly depend on our intended audience:

I. Title

II. Abstract

III. Project description

A. Results from prior work

B. Problem statement and significance

C. Introduction and background

1. Relevant literature review

2. Preliminary data

3. Conceptual, empirical, or theoretical model

4. Justification of approach or novel methods

D. Research plan

1. Overview of research design

2. Objectives or specific aims, hypotheses, and methods

3. Analysis and expected results

4. Timetable

E. Broader impacts

IV. References cited

V. Budget and budget justification

While these sections are generically labelled and ordered, each should be specific to an actual proposal. Let’s consider a few sections. The title, for example, should accurately reflect the content and scope of the overall proposal. The abstract frames the goals and scope of the study, briefly describes the methods, and presents the hypotheses and expected results or outputs. It also sets up proper expectations, so be careful to avoid misleading readers into thinking that the proposal addresses anything other than the actual research topic. Try for no more than two short paragraphs.

Within the project description, the problem statement and significance typically begin with the overall scope and then funnel the reader through the hypotheses to the goals or specific aims of the research.

The literature review sets the stage for the proposal by discussing the most widely accepted or influential papers on the research. The key here is to provide context and be able to show where the proposed work would extend what has been done or how it fills a gap or resolves uncertainty, etcetera. We will discuss this in detail later.

Preliminary data can help establish credibility, likely success, or novelty of the proposal. But we should avoid overstating the implications of the data or suggesting we’ve already solved the problem.

In the research plan, the goal is to keep the audience focused on the overall significance, objectives, specific aims, and hypotheses while providing important methodological, technological, and analytical details. It contains the details of the implementation, analysis, and inferences of the study. Our job is typically to convince our audience that the project can be accomplished.

Objectives refer to broad, scientifically far-reaching aspects of a study, while hypotheses refer to a more specific set of testable conjectures. Specific aims focus on a particular question or hypothesis and the methods needed and outputs expected to fulfill the aims. Of note, these objectives will typically have already been (briefly) introduced earlier, for example, in the abstract. Later sections add relevant detail.

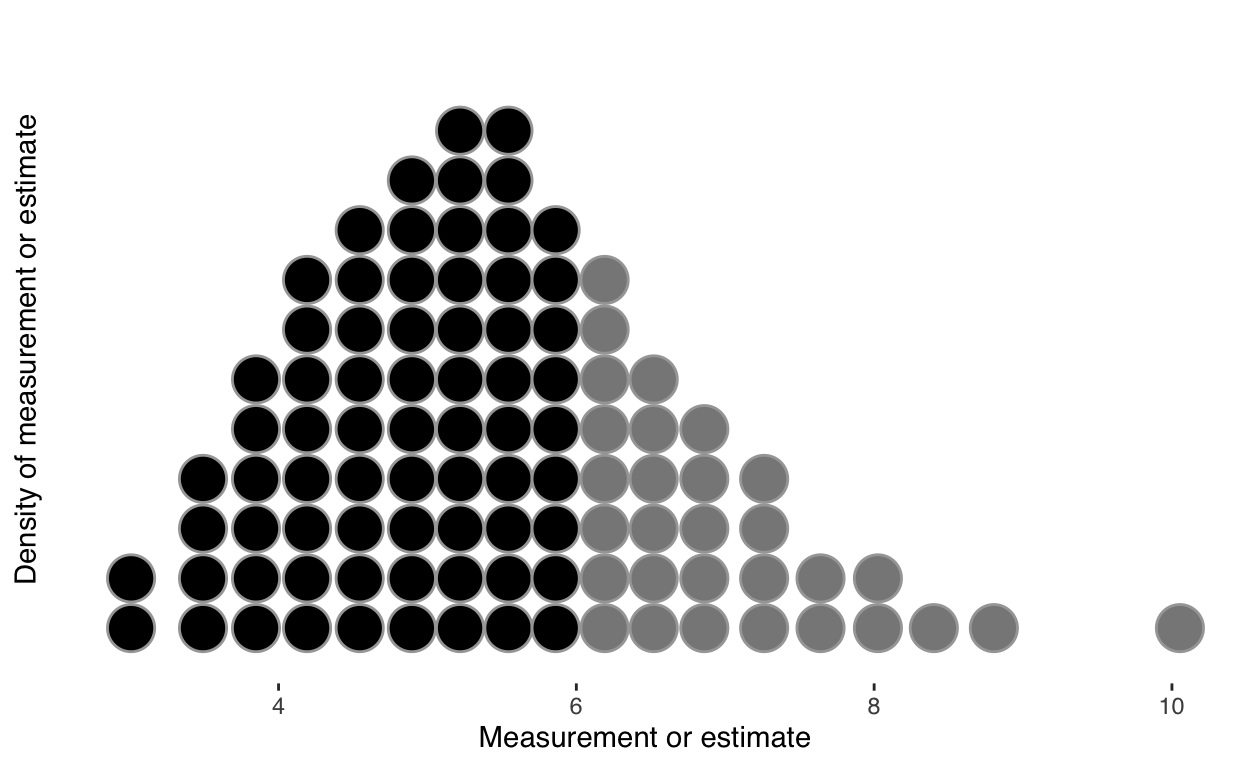

If early data are available, show how you will analyze them to reach your objectives or test your hypotheses, and discuss the scope of results you might eventually expect. If such data are unavailable, consider culling data from the literature to show how you expect the results to turn out and to show how you will analyze your data when they are available. Complete a table or diagram, or run statistical tests using the preliminary or “synthesized” data. This can be a good way to show how you would interpret the results of such data.

From studying these generic proposal categories, we get a rough sense of what we, and our audiences, may find helpful in understanding an analytics project, results, and implications: the content we communicate. Let’s now focus on analytics projects in more detail.

1.1.1.1 Data measures in analytics projects

Data analytics projects, of course, require data. What, then, are data? Let’s consider what Kelleher and Tierney (2018) have to say in their aptly titled chapter, What are data, and what is a data set? Consider their definitions:

datum : an abstraction of a real-world entity (person, object, or event). The terms variable, feature, and attribute are often used interchangeably to denote an individual abstraction.

Data are the plural of datum. And:

data set : consists of the data relating to a collection of entities, with each entity described in terms of a set of attributes. In its most basic form, a data set is organized in an \(n \times m\) data matrix called the analytics record, where \(n\) is the number of entities (rows) and \(m\) is the number of attributes (columns).

Data may be of different types, including nominal, ordinal, and numeric. These have sub-types as well. Nominal types are names for categories, classes, or states of things. Ordinal types are similar to nominal types, except that it is possible to rank or order categories of an ordinal type. Numeric types are measurable quantities we can represent using integer or real values. Numeric types can be measured on an interval scale or a ratio scale. The data attribute type is important as it affects our choice of analyses and visualizations.
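To make these definitions concrete, here is a minimal sketch of an analytics record with one attribute of each type. The `Ride` entity, its attribute names, the membership levels, and the values are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, fields
from enum import IntEnum


class Membership(IntEnum):
    """Ordinal attribute: categories with a meaningful rank (hypothetical levels)."""
    DAY_PASS = 1
    MONTHLY = 2
    ANNUAL = 3


@dataclass
class Ride:
    """One entity (row) described by a set of attributes (columns)."""
    start_station: str       # nominal: a name with no inherent order
    membership: Membership   # ordinal: categories that can be ranked
    duration_min: float      # numeric, ratio scale: zero means no duration
    temperature_c: float     # numeric, interval scale: zero is arbitrary


# Hypothetical entities, for illustration only.
rides = [
    Ride("Station A", Membership.ANNUAL, 12.5, 21.0),
    Ride("Station B", Membership.DAY_PASS, 34.0, 18.5),
    Ride("Station A", Membership.MONTHLY, 8.0, 25.0),
]

n = len(rides)          # number of entities (rows)
m = len(fields(Ride))   # number of attributes (columns)
print(f"analytics record is {n} x {m}")

# Ranking is meaningful for the ordinal attribute, not for the nominal one.
highest_membership = max(ride.membership for ride in rides)
print(highest_membership.name)
```

Declaring the type of each attribute up front is one way to keep the later choice of analysis and visualization honest: a mean of `duration_min` is meaningful, while a mean of `start_station` is not.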

Data can also be structured (like a table) or unstructured (more like the words in this document). And data may be in a raw form such as an original count or measurement, or it may be derived, such as an average of multiple measurements, or a functional transformation. Normally, the real value of a data analytics project is in using statistics or modelling “to derive one or more attributes that provide insight into a problem” (Kelleher and Tierney 2018).
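The distinction between raw and derived data can be sketched in a few lines. The measurements below are hypothetical; the two derived attributes are the ones named above, an average and a functional transformation (here, a natural logarithm, chosen only as an example).

```python
import math
from statistics import mean

# Raw data: repeated measurements of one quantity (hypothetical values).
raw_durations_min = [12.5, 34.0, 8.0, 15.5]

# Derived attribute: an average of multiple measurements.
mean_duration = mean(raw_durations_min)

# Derived attribute: a functional transformation of each measurement.
log_durations = [math.log(d) for d in raw_durations_min]

print(mean_duration)
print([round(v, 3) for v in log_durations])
```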

Finally, existing data originally for one purpose may be used in an observational study, or we may conduct controlled experiments to generate data.

But it’s important to understand, on another level, what data represents. Lupi (2016) offers an interesting and helpful take,

Data represents real life. It is a snapshot of the world in the same way that a picture catches a small moment in time. Numbers are always placeholders for something else, a way to capture a point of view — but sometimes this can get lost.

1.1.1.2 Understanding data requires context

Data measurements never reveal all aspects relevant to their generation or impact upon our analysis (Loukissas 2019). Loukissas (2019) provides several interesting examples where local information about how the data were generated matters greatly to whether we can fully understand the recorded, or measured, data. His examples include plant data in an arboretum, artifact data in a museum, collection data at a library, information in the news as data, and real estate data. Using these examples, he convincingly argues we need to shift our thinking from data sets to data settings.

Let’s consider another example, from baseball. In the game, a batter that hits the pitched ball over the outfield fence between the foul poles scores for his team — he hits a home run. But a batter’s home run count in a season does not tell us the whole story of their ability to hit home runs. Let’s consider some of the context in which a home run occurs. Batters hit a home run pitched by a specific pitcher, in a specific stadium, in specific weather conditions. All of these circumstances (and more) contribute to the existence of a home run event, but that context isn’t typically considered. Sometimes partly, rarely completely.

Perhaps obviously, all pitchers have different abilities to pitch a ball in a way that affects a batter’s ability to hit the ball. Let’s leave that aside for the moment, and consider more concrete context.

In Major League Baseball there are 30 teams, each with its own stadium. But each stadium’s playing field is sized differently from the others, and each stadium’s outfield fence varies in height and differs from the fences of other stadiums! To explore this context, in Figure 1, hover your cursor over a particular field or fence to link them together and compare with others.

Figure 1: We cannot understand the outcome of a batter’s hit without understanding its context, including the distances and heights of each stadium’s outfield fences.

You can further explore this context in an award-winning, animated visualization (Vickars 2019, winner Kantar Information is Beautiful Awards 2019). Further, the trajectory of a hit baseball depends heavily on characteristics of the air, including density, wind speed, and direction (Adair 2017). The ball will not travel as far in cold, dense air. And density depends on temperature, altitude, and humidity. Some stadiums have a roof with conditioned air protected somewhat from weather. But most are exposed. Thus, we would learn more about the qualities of a particular batter’s home run if understood in the context of these data.
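As a rough illustration of how air density depends on temperature, altitude, and humidity, the sketch below treats moist air as a mixture of two ideal gases. It is not taken from Adair (2017). It assumes a standard-atmosphere approximation for pressure at altitude and the Tetens approximation for saturation vapor pressure, and the function names are hypothetical.

```python
R_DRY = 287.058    # specific gas constant of dry air, J/(kg*K)
R_VAPOR = 461.495  # specific gas constant of water vapor, J/(kg*K)


def pressure_at_altitude(altitude_m: float) -> float:
    """Standard-atmosphere pressure in Pa (an approximation for the troposphere)."""
    return 101325.0 * (1.0 - 2.25577e-5 * altitude_m) ** 5.25588


def saturation_vapor_pressure(temp_c: float) -> float:
    """Tetens approximation of saturation vapor pressure over water, in Pa."""
    return 610.78 * 10 ** (7.5 * temp_c / (temp_c + 237.3))


def air_density(temp_c: float, altitude_m: float, rel_humidity: float) -> float:
    """Density of moist air in kg/m^3; rel_humidity is a fraction from 0 to 1."""
    temp_k = temp_c + 273.15
    p_total = pressure_at_altitude(altitude_m)
    p_vapor = rel_humidity * saturation_vapor_pressure(temp_c)
    p_dry = p_total - p_vapor
    # Sum the densities of the dry-air and water-vapor components.
    return p_dry / (R_DRY * temp_k) + p_vapor / (R_VAPOR * temp_k)


# Hypothetical game-day conditions at sea level and at 1,600 m.
print(round(air_density(temp_c=25.0, altitude_m=0.0, rel_humidity=0.5), 3))
print(round(air_density(temp_c=25.0, altitude_m=1600.0, rel_humidity=0.5), 3))
```

Recording these three conditions alongside each hit would let us compare home runs across stadiums and days on a more equal footing.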

Other aspects of this game are equally context-dependent. Consider each recorded ball or strike, an event made by the umpire when the batter does not swing at the ball. The umpire’s call is intended to describe location of the ball as it crosses home plate. But errors exist in that measurement. It depends on human perception, for one. We have independent measurements by a radar system (as of 2008). But that too has measurement error we can’t ignore. Firstly, there are 30 separate radar systems, one for each stadium. Secondly, those systems require periodic calibration. And calibration requires, again, human intervention. Moreover, the original radar systems installed in these stadiums in 2007 are no longer used. Different systems have been installed and used in their place. Thus, to fully understand the historical location of each pitched baseball and outcome means we must research and investigate these systems.

So when we really want to understand an event and compare among events (comparison is crucial for meaning), context matters. We’ve seen this in the baseball example, and in Loukissas’s several fascinating case study examples with many types of data. When we communicate about data, we should consider context, data settings.

1.1.1.3 Project scope

More on scope. At a high level, it involves an iterative progression of the identification and understanding of decisions, goals and actions, methods of analysis, and data.

Figure 2: Analytic components of a general statistical workflow, adapted from Pu and Kay (2018).

The framework of identifying goals and actions, and following with information and techniques gives us a structure not unlike having the outline of a story, beginning with why we are working on a problem and ending with how we expect to solve it. Just as stories sometimes evolve when retold, our ideas and structure of the problem may shift as we progress on the project. But like the well-posed story, once we have a well-scoped project, we should be able to discuss or write about its arc — purpose, problem, analysis and solution — in relevant detail specific to our audience.

Specificity in framing and answering basic questions is important: What problem is to be solved? Is it important? Does it have impact? Do data play a role in solving the problem? Are the right data available? Is the organization ready to tackle the problem and take actions from insights? These are the initial questions of a data analytics project. Project successes inevitably depend on our specificity of answers. Be specific.

1.1.1.4 Defining goals, actions, and problems

Identifying a specific problem is the first step in any project. And a well-defined problem illuminates its importance and impact. The problem should be solvable with identified resources. If the problem seems unsolvable, try focusing on one or more aspects of the problem. Think in terms of goals, actions, data, and analysis. Our objective is to take the outcome we want to achieve and turn it into a measurable and optimizable goal.

Consider what actions can be taken to achieve the identified goal. Such actions usually need to be specific. A well-specified project ideally has a set of actions that the organization is taking (or can take) that can now be better informed through data science. While improving on existing actions is a good general starting point in defining a project, the scope does not need to be so limited. New actions may be defined too. Conversely, if the problem stated and anticipated analyses do not inform an action, they are usually not helpful in achieving organizational goals. To optimize our goal, we need to define the expected utility of each possible action.
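One common way to define the expected utility of an action is as the sum, over possible states of the world, of the probability of each state times the utility of the action in that state. The sketch below assumes that definition. The inventory decision, the states, the probabilities, and the utilities are all hypothetical.

```python
# Hypothetical probabilities of next week's demand.
demand_probs = {"low": 0.2, "typical": 0.5, "high": 0.3}

# Hypothetical utility of each action under each demand state.
utilities = {
    "order nothing":  {"low": 0, "typical": -5, "high": -20},
    "order 10 units": {"low": -4, "typical": 6, "high": 10},
    "order 20 units": {"low": -8, "typical": 2, "high": 18},
}


def expected_utility(action: str) -> float:
    """Probability-weighted sum of the action's utility over all states."""
    return sum(demand_probs[state] * utilities[action][state]
               for state in demand_probs)


for action in utilities:
    print(action, round(expected_utility(action), 2))

# The action that maximizes expected utility under these assumptions.
best_action = max(utilities, key=expected_utility)
print(best_action)
```

Writing the goal this way forces the specificity urged above: we must name the actions, the uncertain states, and how much each outcome matters.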

1.1.1.5 Researching and using what is known

The general point of data analyses is to add to the conversation of what is understood. An answer, then, requires research: what’s understood? In considering how to begin, we get help from J. Harris (2017) in another context, making interesting use of texts in writing essays. We need to “situate our [data analyses] about … an issue in relation to what others have written about it.” That’s the real point of the above “literature review” that funding agencies expect, and it’s generally the place to start our work.

…

Searching for what is known involves both the “literature” on whatever issue we’re interested in, and any related data.

1.1.1.6 Identifying accessible data

Do data play a role in solving the problem? Before a project can move forward, data must be both accessible and relevant to the problem. Consider what variables each data source contributes. While some data are publicly available, other data are privately owned and permission becomes a prerequisite. And to the extent data are unavailable, we may need to set up experiments to generate them ourselves. To be sure, obtaining the right data is usually a top challenge: sometimes the variable is unmeasured or not recorded.

In cataloging the data, be specific. Identify where data are stored and in what form. Are data recorded on paper or electronically, such as in a database or on a website? Are the data structured, such as a CSV file, or unstructured, like comments on a Twitter feed? Provenance is important (Moreau et al. 2008): how were the data recorded? By a human or by an instrument?

What quality are the data (Fan 2015)? Is there measurement error? Are observations missing? How frequently are the data collected? Are they available historically, or only in real-time? Do the data have documentation describing what they represent? These are but a few questions whose answers may impact your project or approach. By extension, they affect what and how you communicate.
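A few of these questions can be asked of the data directly. The sketch below checks two of them, missing observations and collection frequency, on a handful of hypothetical records. The field names and values are assumptions made for illustration.

```python
from datetime import datetime

# Hypothetical records; None marks a value that was not recorded.
records = [
    {"station": "A", "time": "2019-06-01T08:00", "bikes": 12},
    {"station": "A", "time": "2019-06-01T08:05", "bikes": None},
    {"station": "B", "time": "2019-06-01T08:00", "bikes": 3},
    {"station": None, "time": "2019-06-01T08:10", "bikes": 7},
]


def missing_share(rows, field):
    """Fraction of rows in which `field` is missing."""
    return sum(row[field] is None for row in rows) / len(rows)


# Are observations missing? Report the share missing for each field.
missing = {f: missing_share(records, f) for f in ("station", "time", "bikes")}
print(missing)

# How frequently are data collected? Look at gaps between sorted timestamps.
times = sorted(datetime.fromisoformat(row["time"]) for row in records)
gaps_min = [(later - earlier).total_seconds() / 60
            for earlier, later in zip(times, times[1:])]
print(gaps_min)
```

Checks like these do not answer the questions about provenance or documentation, which usually require talking with whoever collected the data.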

1.1.1.7 Identifying analyses and tools

Once data are collected, the workflow needed to bridge the gap between raw data and actions typically involves an iterative process of conducting both exploratory and confirmatory analysis (Pu and Kay 2018), see Figure 2, which employs visualization, transformation, modeling, and testing. The techniques potentially available for each of these activities may well be infinite, and each deserves a course of study in itself. Wongsuphasawat, Liu, and Heer (2019), as their title suggests, review common goals, processes, and challenges of exploratory data analysis.

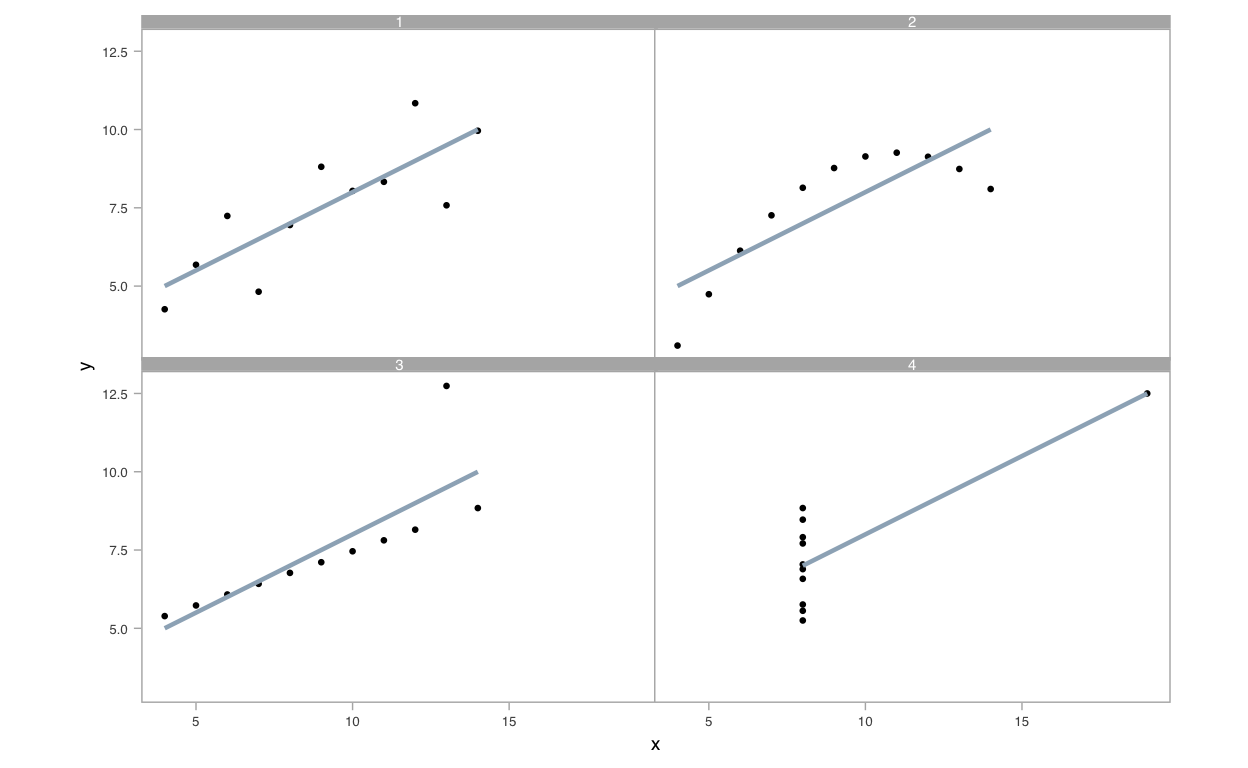

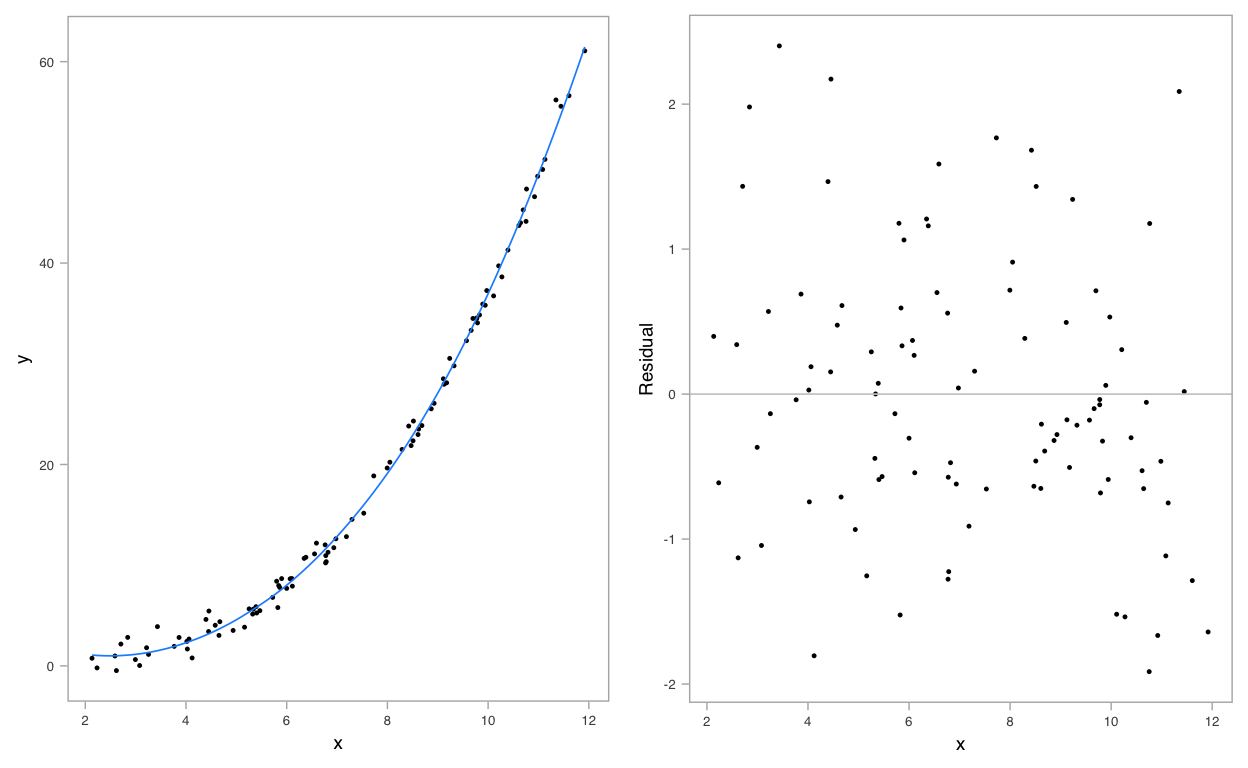

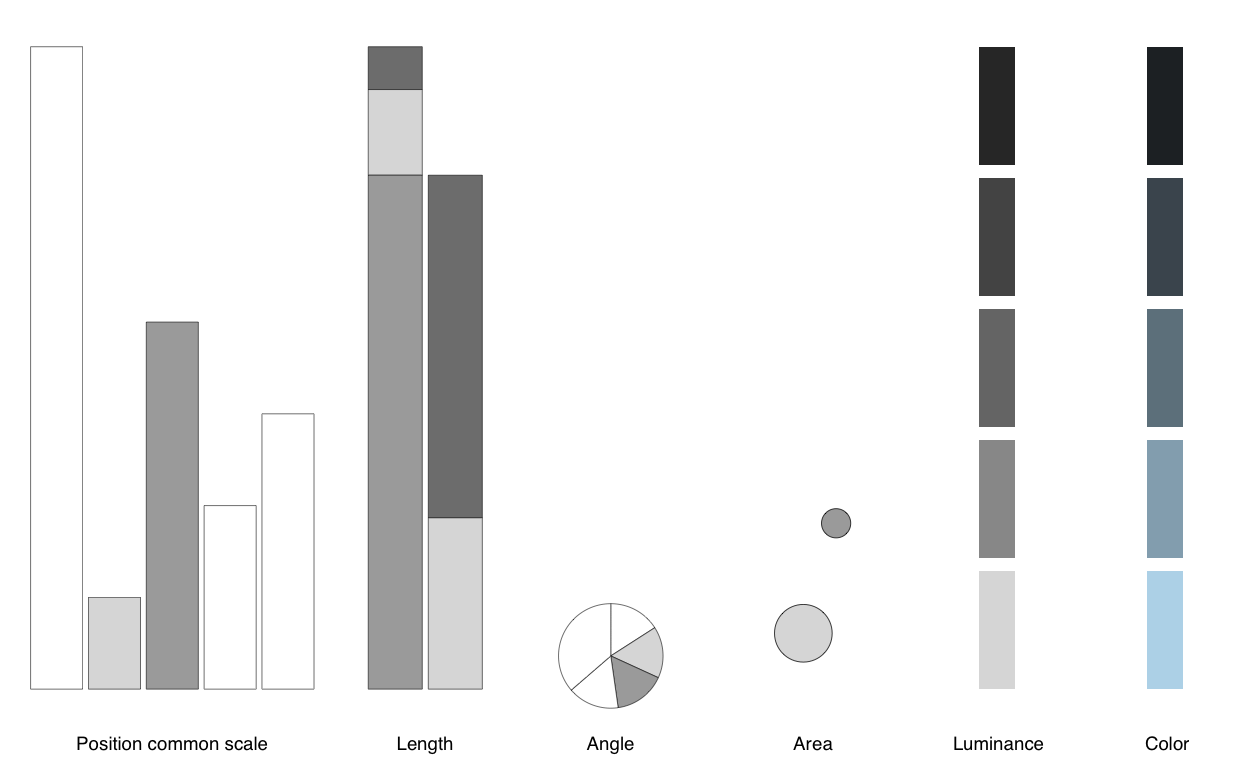

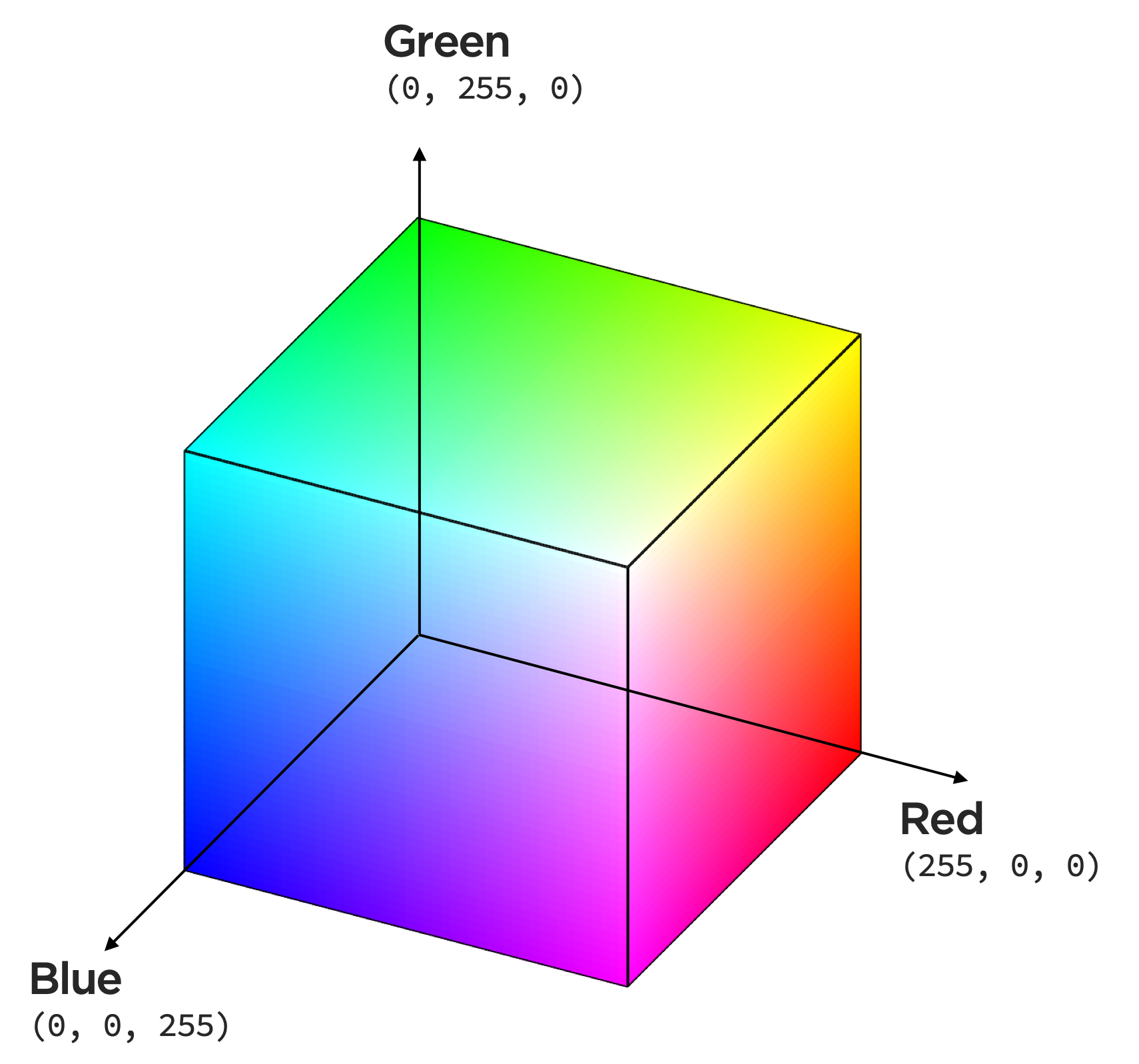

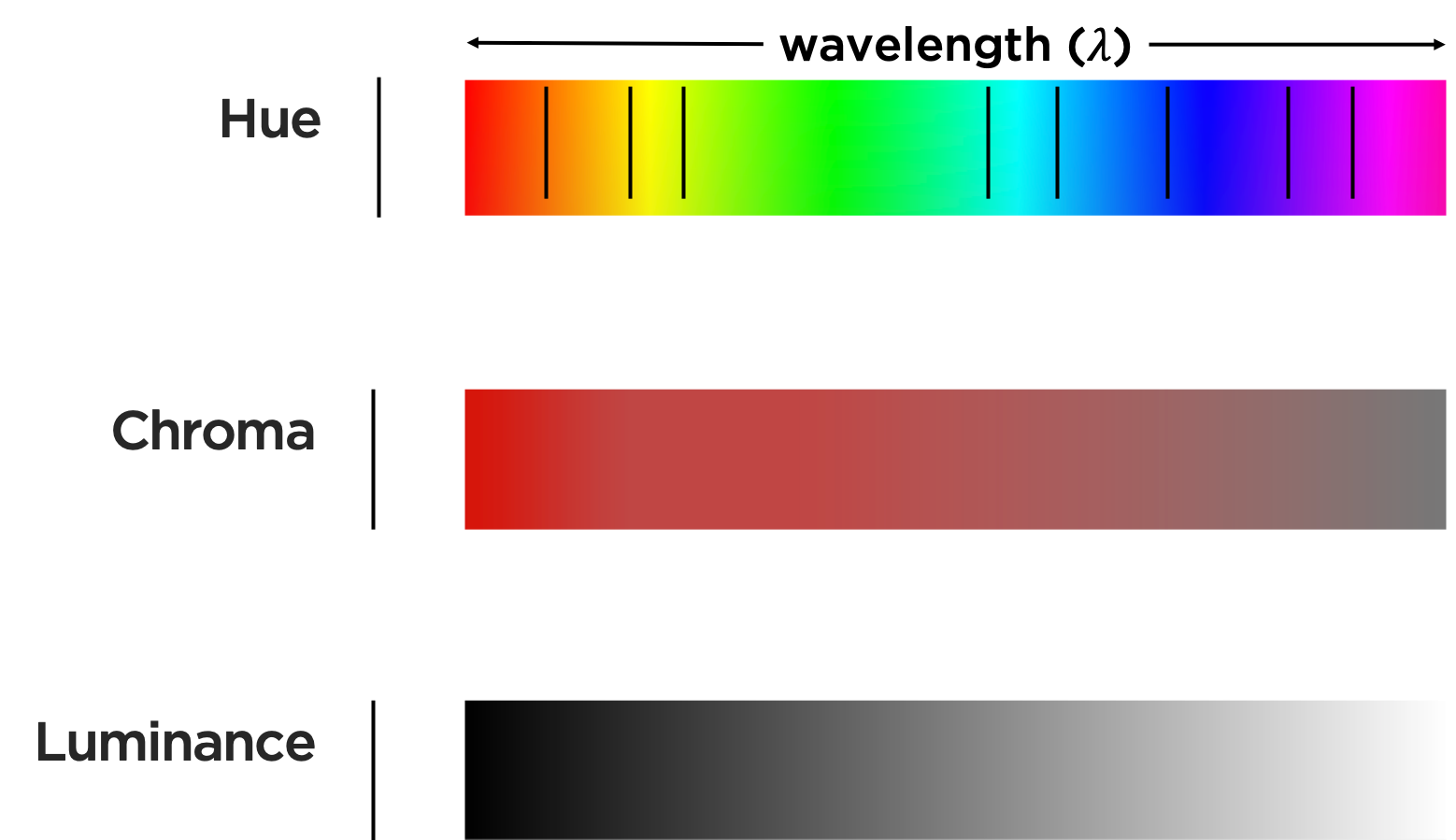

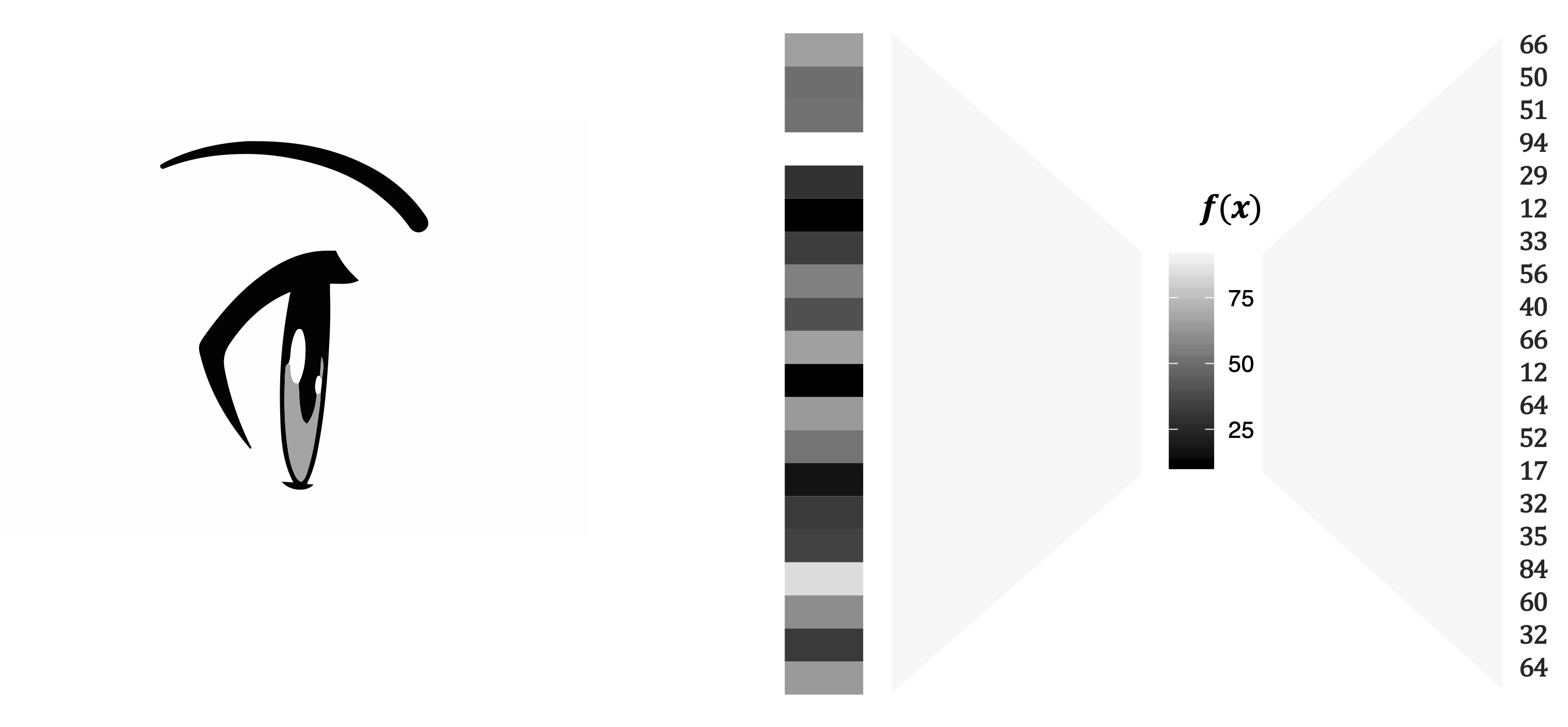

Today’s tools for exploratory data analysis frequently begin by encoding data as graphics, thanks first to John Tukey, who pioneered the field in Tukey (1977), and subsequent, and applied, work in graphically exploring statistical properties of data. Cleveland (1985), the first of Cleveland’s texts on exploratory graphics, considers basic principles of graph construction (e.g., terminology, clarifying graphs, banking, scales), various graphical methods (logarithms, residuals, distributions, dot plots, grids, loess, time series, scatterplot matrices, coplots, brushing, color, statistical variation), and perception (superposed curves, color encoding, texture, reference grids, banking to 45 degrees, correlation, graphing along a common scale). His second book, Cleveland (1993), builds upon learnings of his first, and explores univariate data (quantile plots, Q-Q plots, box plots, fits and residuals, log and power transformations, etcetera), bivariate data (smooth curves, residuals, fitting, transforming factors, slicing, bivariate distributions, time series, etcetera), trivariate data (coplots, contour plots, 3-D wireframes, etcetera), and hypervariate data (using scatterplot matrices and linking and brushing). Chambers (1983) in particular explores and compares distributions, explicitly considers two-dimensional data, plots in three or more dimensions, assesses distributional assumptions, and develops and assesses regression models. These three are all helpfully abstracted from any particular programming language. Unwin (2016) applies exploratory data analysis using R, examining (univariate and multivariate) continuous variables, categorical data, relationships among variables, data quality (including missingness and outliers), making comparisons, through both single graphics and an ensemble of graphics.
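Several of the univariate tools named above, such as quantile plots and box plots, rest on a few summary numbers. As a minimal sketch, the code below computes a five-number summary and flags points beyond 1.5 times the interquartile range, the conventional box-plot rule. The values are hypothetical, and the `inclusive` quartile method is only one of several conventions.

```python
from statistics import quantiles

values = [1, 2, 3, 4, 5, 6, 7, 8, 40]  # hypothetical measurements

# Quartiles; other quartile conventions can give slightly different results.
q1, median, q3 = quantiles(values, n=4, method="inclusive")
five_number = (min(values), q1, median, q3, max(values))
print(five_number)

# Flag points more than 1.5 * IQR beyond the quartiles.
iqr = q3 - q1
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr
outliers = [v for v in values if v < lower_fence or v > upper_fence]
print(outliers)
```

A flagged point is a prompt for questions about context and measurement, not a verdict that the observation is wrong.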

While these texts thoroughly discuss approaches to exploratory data analyses to help data scientists understand their own work, these do not focus on communicating analyses and results to other audiences. In this text and through references to other texts we will cover communication with other audiences.

To effectively use graphics tools for exploratory analysis requires the same understanding, if not the same approach, we need for graphically communicating with others, which we explore in a later section.

Along with visualization, we can use regression to explore data, as is well explained in the introductory textbook Gelman, Hill, and Vehtari (2020), which also includes the use of graphics to explore models and estimates. These tools, and more, contribute to an overall workflow of analysis: Gelman et al. (2020) suggest best practices.

Again, the particular information and its ordering in any communication of these analyses and results depend entirely on our audience. After we begin exploring an example data analysis project, and consider workflow, we will consider audience.

1.1.2 Applying project scope: Citi Bike

Let’s develop the concept of project scope in the context of an example, one to help the bike share sponsored by Citi Bike.

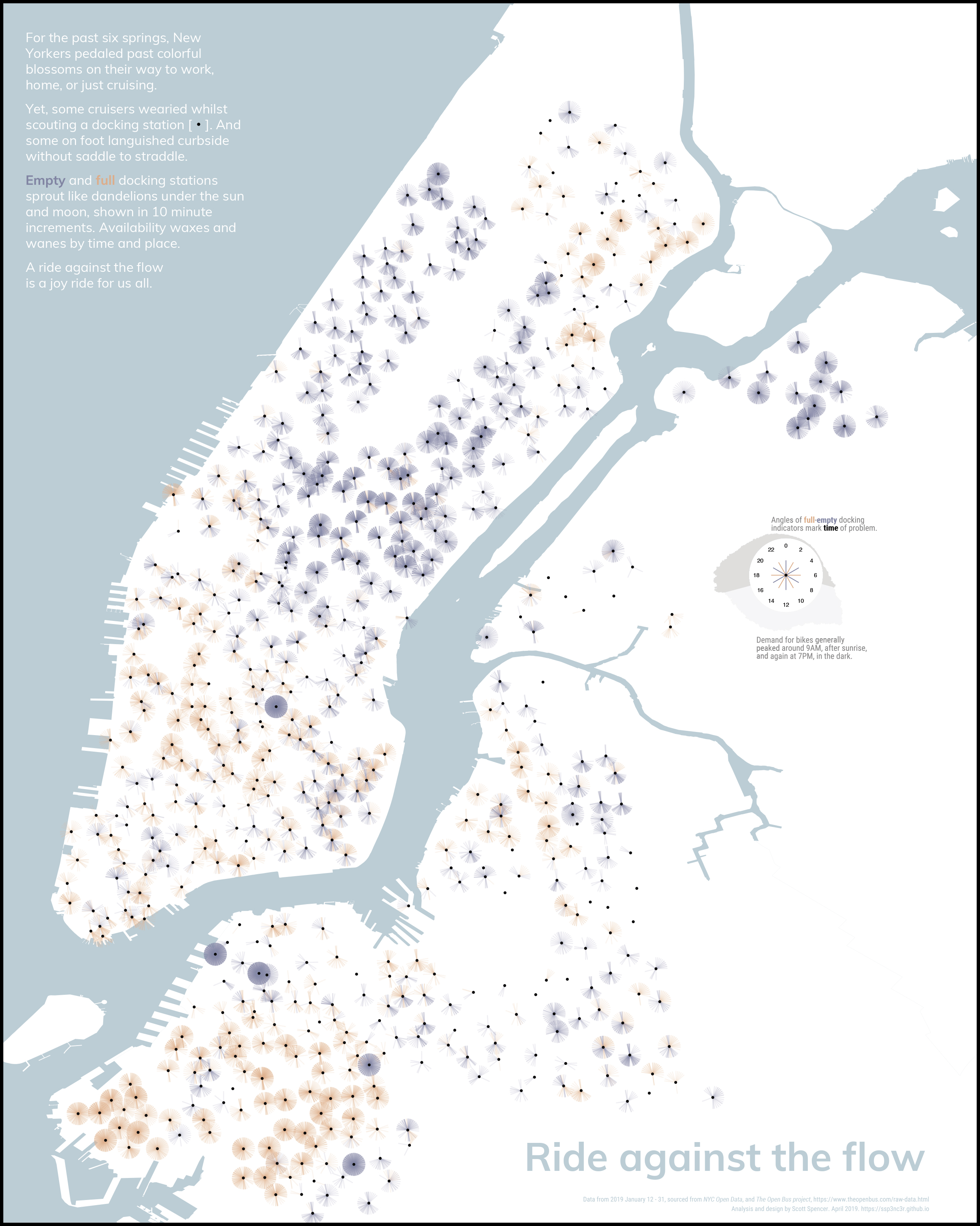

You may have heard about, or even rented, a Citi Bike in New York City. Researching the history, we learn that in 2013, the New York City Department of Transportation sought to start a bike share to reduce emissions, road wear, congestion, and improve public health. After selecting an operator and sponsor, the Citi Bike bike share was established with a bike fleet distributed over a network of docking stations throughout the city. The bike share allows customers to unlock a bike at one station and return it at any other empty dock.

Might this be a problem for which we can find available data and conduct analyses to inform the City’s actions and further its goals?

Exercise 1 Explore the availability of bikes and docking spots as depending on users’ patterns and behaviors, events and locations at particular times, other forms of transportation, and on environmental context. What events may be correlated with or cause empty or full bike docking stations? What potential user behaviors or preferences may lead to these events? From what analogous things could we draw comparisons to provide context? How may these events and behaviors have been measured and recorded? What data are available? Where are they available? In what form? In what contexts are the data generated? In what ways may we find incomplete or missing data, or other errors in the stored measurements? May these data be sufficient to find insights through analysis, useful for decisions and goals?
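As one possible starting point for the exercise, the sketch below counts arrivals and departures per station from trip records and computes the net flow. The records, station names, and the two-field layout are hypothetical and do not describe any actual published data format.

```python
from collections import Counter

# Hypothetical trip records: (start_station, end_station).
trips = [
    ("Uptown", "Midtown"),
    ("Uptown", "Midtown"),
    ("Midtown", "Downtown"),
    ("Uptown", "Downtown"),
    ("Downtown", "Midtown"),
]

departures = Counter(start for start, _ in trips)
arrivals = Counter(end for _, end in trips)

# Net flow: a negative value means more bikes left the station than arrived,
# so the station tends toward empty; a positive value tends toward full.
stations = sorted(set(departures) | set(arrivals))
net_flow = {station: arrivals[station] - departures[station]
            for station in stations}
print(net_flow)
```

Grouping the same counts by hour of day, weather, or nearby events would begin to address the questions about context raised in the exercise.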

Answers to questions such as these provide necessary material for communication. Before digging into an analysis, let’s discuss two other aspects of workflow: reproducibility and code clarity.

1.1.3 Workflow for credible communication

Truth is tough. It will not break, like a bubble, at a touch; nay, you may kick it about all day, like a football, and it will be round and full at evening (Holmes 1894).

To be most useful, reproducible work must be credibly truthful, which means that our critics can test our language, our information, our methodologies, from start to finish. That others have not done so led to the reproducibility crisis noted in (Baker 2016):

More than 70% of researchers have tried and failed to reproduce another scientist’s experiments, and more than half have failed to reproduce their own experiments.

By reproducibility, this meta-analysis considers whether replicating a study resulted in the same statistically significant finding (some have argued that reproducibility as a measure should compare, say, a p-value across trials, not whether the p-value crossed a given threshold in each trial). Regardless, we should reproducibly build our data analyses like Holmes’s football, for our critics (later selves included) to kick it about. What does this require? Ideally, our final product should include all components of our analysis from thoughts on our goals, to identification of, and code for, collection of data, visualization, modeling, reporting and explanations of insights. In short, the critic, with the touch of her finger, should be able to reproduce our results from our work. Perhaps that sounds daunting. But with some planning and use of modern tools, reproducibility is usually practical. Guidance on assessing reproducibility and a template for reproducible workflow are described by Kitzes and co-authors (Kitzes, Turek, and Deniz 2018), along with a collection of more than 30 case studies. The authors identify three general practices that lead to reproducible work, to which I’ll add a fourth:

1. Clearly separate, label, and document all data, files, and operations that occur on data and files.

2. Document all operations fully, automating them as much as possible, and avoiding manual intervention in the workflow when feasible.

3. Design a workflow as a sequence of small steps that are glued together, with intermediate outputs from one step feeding into the next step as inputs.

4. The workflow should track your history of changes.
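The first three practices can be sketched as a small pipeline in which each step is a function that reads one labelled file and writes the next. This is a minimal illustration under assumed file names and made-up data, not the template from Kitzes, Turek, and Deniz (2018). The fourth practice, tracking history, is typically handled by a version control system and is not shown.

```python
import csv
import json
import tempfile
from pathlib import Path


def collect(raw_path: Path) -> None:
    """Step 1: write raw data to disk (a stand-in for a download or query)."""
    rows = [{"station": "A", "bikes": "12"}, {"station": "B", "bikes": "3"}]
    with raw_path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["station", "bikes"])
        writer.writeheader()
        writer.writerows(rows)


def clean(raw_path: Path, clean_path: Path) -> None:
    """Step 2: read the raw file, convert types, write an intermediate file."""
    with raw_path.open(newline="") as f:
        rows = [{"station": r["station"], "bikes": int(r["bikes"])}
                for r in csv.DictReader(f)]
    clean_path.write_text(json.dumps(rows))


def summarize(clean_path: Path, summary_path: Path) -> None:
    """Step 3: read the cleaned file and write a summary for reporting."""
    rows = json.loads(clean_path.read_text())
    summary = {"stations": len(rows),
               "total_bikes": sum(r["bikes"] for r in rows)}
    summary_path.write_text(json.dumps(summary))


def run_pipeline(workdir: Path) -> dict:
    """Glue the steps together; each output file is the next step's input."""
    raw = workdir / "01_raw.csv"
    cleaned = workdir / "02_clean.json"
    summary = workdir / "03_summary.json"
    collect(raw)
    clean(raw, cleaned)
    summarize(cleaned, summary)
    return json.loads(summary.read_text())


# Run in a temporary directory so the sketch leaves no files behind.
with tempfile.TemporaryDirectory() as tmp:
    result = run_pipeline(Path(tmp))
print(result)
```

Because no step depends on manual intervention, a critic can rerun the whole sequence, or inspect any intermediate file, to see where a result came from.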

Several authors describe modern tools and approaches for creating a workflow that leads to reproducible research supporting credible communication, see (Gandrud 2020) and (Healy 2018a).

The workflow should include the communication. And the communication includes the code. What? Writing code to clean, transform, and analyze data may not generally be thought of as communicating. But yes! Code is language. And sometimes showing code is the most efficient way to express an idea. As such, we should strive for the most readable code possible. For our future selves. And for others. For code style advice, consult Boswell and Foucher (2011) and an update to a classic, Thomas and Hunt (2020).

1.2 Audiences and challenges

1.2.1 Audiences

Sometimes in analytics we write for ourselves, part and parcel of analysis. In another context, writer Joan Didion captured this well:

I write entirely to find out what I’m thinking, what I’m looking at, what I see, and what it means (Didion 1976).

But our introspections are not optimal for others.

1.2.1.1 Analytics executives

Let’s imagine, for a moment, we share the mind of Citi Bike’s Chief Analytics Officer. We know what the public experiences with our bike sharing program, as has also been reported in the news. The newspaper West Side Rag, for example, quoted Dani Simmons, our program’s spokeswoman. She explained: “Rebalancing is one of the biggest challenges of any bike share system, especially in … New York where residents don’t all work a traditional 9-5 schedule, and … people work in a variety of other neighborhoods” (Friedman 2017). As a Chief Analytics Officer, one of our jobs includes analyzing, and overseeing the analyses of, data that inform decisions for solving problems for our organization (Zetlin 2017), like rebalancing.

Would it interest us if we opened an email or memo from one of our data analysts that began:

Citi Bike, a bike sharing program, has struggled to rebalance its bikes. By rebalance, I mean taking actions that ensure customers may both rent bikes and park them at the bike sharing program’s docking stations….

Are we — Citi Bike’s Chief Analytics Officer — motivated to continue reading? Do we know why we should, or whether we should spend our time on other matters? What might we think of the data analyst from whom we received that communication?

Returning, now, to our own minds: for what audience(s), if any, might such a beginning be interesting or helpful? How can we assess whether the communication is appropriate, even optimized, and if not, adjust it to be so? That’s the focus of this text.

As we hone our skills for communicating with intended audiences, we’ll consider other minds, too: executives in analytics, marketing, chief executives, both individually and mixed with secondary or more general audiences. Consider Scott Powers, Director of Quantitative Analytics at the Los Angeles Dodgers, who earned his doctorate in statistics from Stanford University, publishes research in machine learning, codes in R, among other languages, and has worked for the Dodgers for several years.

1.2.1.2 Marketing executives

A Chief Marketing Officer shares some responsibilities with the analytics officer and other executives, while other responsibilities are primarily her own. Broadly, he or she leads responses to changing circumstances; shapes products, sales strategies, and marketing ideas; and collaborates across the company. Meet David Carr, Director of Marketing Strategy and Analysis at Digitas (a marketing agency), who has written to, and about, his marketing colleagues and their uses and misuses of data.

Carr (2019) describes three main types of value that marketing drives:

- business value: long and near-term growth, greater efficiency and enhanced productivity

- consumer value: attitudes and behaviors that affect brand choice, frequency and loyalty

- cultural value: shared beliefs that create a favorable environment in which to operate and influence

He illustrates his research of, and experience with, these values graphically, as a central circle, and in concentric rings identifies various characteristics and details related to these values.

Relatedly, Carr (2016) has mapped out the details for designing and managing a brand, and explained its interconnections:

The brand strategy should be influenced by the business strategy and should reflect the same strategic vision and corporate culture. In addition, the brand identity should not promise what the strategy cannot or will not deliver. There is nothing more wasteful and damaging than developing a brand identity or vision based on strategic imperative that will not get funded. An empty promise is worse than no promise.

We can tie many aspects of brand building and marketing value to measurements and data. Carr (2018) explains how marketing does — and should — work with data. His article suggests how we should craft data-driven messages for marketing executives.

1.2.1.3 Chief executives

Typically, the analytics and marketing executives report, directly or indirectly, to the CEO, who has ultimate responsibility to drive the business. Bertrand (2009) reviews empirical studies on the characteristics of CEOs. Bertrand writes that, while “modern-day CEOs are more likely to be generalists,” more than one quarter of those running Fortune 500 companies have earned an MBA. The core educational components of the MBA program at Columbia, for example, include managerial statistics, business analytics, strategy formulation, marketing, financial accounting, corporate finance, managerial economics, global economic environment, and operations management (Columbia University 2020). This type of curriculum suggests the CEO’s vocabulary intersects with both analytics and marketing. Indeed, Bertrand explains that “current-day CEOs may require a broader set of skills as they directly interact with a larger set of employees within their organization.” Even if they are fluent in the basics of analytics and marketing, their responsibilities are both broader and more focused on leading the drive for creating business value. Our communications with the CEO should begin with and remain focused on how the content of our communication helps the CEO with their responsibilities.

In communicating, we should keep in mind that audiences have a continuum of knowledge. Everyone is a specialist on some subjects and a non-specialist on others. Moreover, even a group of all specialists could be subdivided into more specialized and less specialized readers. Specialists want more detail because they can understand the technical aspects, can often use them in their own work, and require them anyway to be convinced. Non-specialists need you to bridge the gap. The less specialized your audience, the more basic information is required to bridge the gap between what they know and what the document discusses: more background at the beginning, to understand the need for and importance of the work; more interpretation at the end, to understand the relevance and implications of the findings.

Exercise 2 Identify a few analytics, marketing, and chief executives, and research their backgrounds. Describe the similarities and differences, comparing the range of skills and experience you find.

1.2.2 Challenges

1.2.2.1 Information gaps

One challenge in communicating data analytics is understanding what we see. For that, we might consider again Didion (1976)’s thoughts as part of our project. We generally revise our written words and refine our thoughts together; the improvements made in our thinking and improvements made in our writing reinforce each other (Schimel 2012). Clear writing signals clear thinking. To test our project, then, we should clarify it in writing. Once it is clear, we can begin the processes of data collection, further clarify our understanding, begin technical work, again clarify our understanding, and continue the iterative process until we converge on interesting answers that support actions and goals.

More overlooked, to be explored here, is communicating our project effectively to others. Consider the skills typically needed for an analytics project. The qualities we need in an analytics team, writes Berinato (2018), include project management, data wrangling, data analysis, subject expertise, design, and storytelling. For that team to create value, they must first ask smart questions, wrangle the relevant data, and uncover insights. Second, the team must figure out — and communicate — what those insights mean for the business.

These communications can be challenging, however, as an interpretation gap frequently exists between data scientists and the executive decision makers they support; see Maynard-Atem and Ludford (2020) and Brady, Forde, and Chadwick (2017).

How can we address such a gap?

Brady and his co-authors argue that data translators should bridge the gap, address data hubris and decision-making biases, and find linguistic common ground. Subject-matter experts should be taught the quantitative skills to bridge the gap because, they continue, it is easier to teach quantitative theory than practical, business experience.

Before delving into the above arguments, let’s first consider from what perspective we’re reading. Both perspectives are written for business executives: Berinato writes in the Harvard Business Review, and Brady and his co-authors write in MIT Sloan Management Review. According to HBR, their “readers have power, influence, and potential. They are senior business strategists who have achieved success and continue to strive for more. Independent thinkers who embrace new ideas. Rising stars who are aiming for the top” (“HBR Advertising and Sales,” n.d.). Similarly, MIT Sloan Management Review reports their audience: “37% of MIT SMR readers work in top management, while 72% confirm that MIT SMR generates a conversation with friends or colleagues” (“Print Advertising Opportunities” 2020). Further, all authors are in senior management. Berinato is a senior editor. Brady and co-authors are consultants focusing on sports management. Why might it be important that we know both an author’s background and their intended audience?

Perhaps it is not surprising for a senior executive to conclude that it would be easier to teach data science skills to a business expert than to teach the subject of a business or field to those already skilled in data science. Is this generally true? Might the background of a data translator depend upon the type of business or type of data science? Is it appropriate for this data translator to be an individual? Berinato argues that data science work requires a team. Might the responsibility of a data translator be shared?

Bridging the gap requires developing a common language. Senior management do not all use the same vocabulary and terms as analysts. Decision makers seek clear ways to receive complex insights. Plain language, aided by visuals, allows easier absorption of the meaning of data. Along with common language, data translators should foster better communication habits. Begin with questions, not assertions. Then, use analogies and anecdotes that resonate with decision makers. Finally, whoever fills this role must hone their skills, which include business and analytics knowledge. They must also learn to speak the truth, be constantly curious to learn, craft accessible questions and answers, keep high standards and attention to detail, and be self-starters.

1.2.2.2 Multiple or mixed audiences

Frequently we encounter mixed audiences. Audiences are multiple, for each reader is unique. Still, readers can usefully be classified in broad categories on the basis of their proximity both to the subject matter (the content) and to the overall writing situation (the context). Primary readers are close to the situation in time and space; secondary readers are further from it. Uncertainty of the knowledge of a reader is like having a mixed audience, one knowing more than the other. Writing for a mixed audience is, thus, quite challenging. The challenge in writing for a mixed audience is to give secondary readers information that we assume the primary readers know already while keeping the primary readers interested. The solution, conceptually, is simple: just ensure that each sentence makes an interesting statement, one that is new to all readers, even if it includes information that is new to secondary readers only. Thus, make each sentence interesting for all audiences. Let’s consider Doumont (2009d)’s examples for incorporating mixed audiences. The first sentence in his example,

We worked with IR.

may not work because IR may be unfamiliar to some in the audience. One might try to fix the issue by defining the word or, in this case, the acronym:

We worked with IR. IR stands for Information Resources and is a new department.

But that isn’t ideal either because those who already know the meaning aren’t given new information. It is, in fact, pedantic. The better approach is to weave additional information, like a definition, into the information that the specialist also finds interesting, like so:

We worked with the recently launched Information Resources (IR) department.

We’ll consider these within the context of effective business writing.

1.2.3 The utility of decisions

For data analysis to grab an audience’s attention, the communication of that analysis should answer their question, “so what?” Now that the audience knows what you’ve explained, what should come of it? What does it change?

To get to such an answer we need to think about the expected utility of the information in terms of that so what. This idea is a more formal quantification driving towards purpose for a particular audience. We’ll just introduce a few concepts without details here as this topic is advanced, given its placement in our text’s sequencing, but it’s important for future reference to have an awareness that these concepts exist.

We can combine probability distributions of expected outcomes and the utility of those outcomes to enable rational decisions (Parmigiani 2001; Gelman et al. 2013, chap. 9). In simple terms, this means we can decide how much each possible outcome is worth, multiply that by the probability that each outcome happens, and sum those products for each action we might take. Then, we, or our audience, can choose the “optimal” action. Slightly more formally, optimal decisions choose an action that maximizes expected utility (minimizes expected loss), where the expectation is computed using a posterior distribution.
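To make the simple version concrete, here is a minimal sketch in Python. The two actions, their probabilities, and their utilities are all hypothetical values invented for illustration; they do not come from any project discussed in this text.

```python
# Hypothetical decision with two candidate actions.
# Each action maps to a list of (probability, utility) pairs.
# Every number below is invented for illustration only.
actions = {
    "launch_campaign": [(0.6, 50_000), (0.4, -20_000)],
    "do_nothing": [(1.0, 0)],
}


def expected_utility(outcomes):
    """Sum of probability-weighted utilities for one action."""
    return sum(p * u for p, u in outcomes)


# Expected utility of each action, then the action with the largest value.
expected = {name: expected_utility(o) for name, o in actions.items()}
best_action = max(expected, key=expected.get)

print(expected)
print(best_action)
```

The sketch assumes utilities are already on a common scale (here, something like dollars). In practice, deciding what each outcome is worth to the audience is often the harder part of the exercise.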

Model choice is a special case of decision-making that uses a zero-one loss function: the loss is zero when we choose the correct model, and one otherwise. Beyond model selection, a business may use as its loss function, say, the negative of the profits arising from its choice of actions, so that minimizing expected loss maximizes expected profits. In more general, mathematical notation, we integrate the product of the loss function and posterior distribution over the unobserved parameters,

\[\begin{equation} \min_a\left\{ \bar{L}(a) = \textrm{E}[L(a,\theta)]= \int{L(a,\theta)\cdot p(\theta \mid D)\;d\theta} \right\} \end{equation}\]

where \(a\) are actions, \(\theta\) are unobserved variables or parameters, and \(D\) are data. The seminal work by von Neumann and Morgenstern (2004) set forth the framework for rational decision-making, and J. O. Berger (1985) is a classic textbook on the intersection of statistical decision theory and Bayesian analysis. Of note, the analyses of either-or decisions, and even sequential decisions, can be fairly straightforward. Complexity grows, however, when multiple objectives or agents are involved.
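When draws from the posterior \(p(\theta \mid D)\) are available, the integral can be approximated by averaging the loss over those draws. The following Python sketch illustrates the idea under loudly stated assumptions: the "posterior draws" are simulated from a normal distribution as a stand-in for output from a fitted model, \(\theta\) is treated as a hypothetical daily demand, the action \(a\) is a hypothetical stocking quantity, and the loss is negative profit with made-up price and cost.

```python
import random

random.seed(1)

# Stand-in for posterior draws of theta given data D.
# Assumption: in practice these would come from a fitted model;
# here they are simulated and the parameters are hypothetical.
posterior_draws = [random.gauss(100, 15) for _ in range(5000)]


def loss(a, theta, price=5.0, cost=3.0):
    """Negative profit from stocking `a` units when demand is `theta`.

    The price and cost values are invented for illustration.
    """
    units_sold = min(a, max(theta, 0.0))
    return -(price * units_sold - cost * a)


def expected_loss(a, draws):
    """Monte Carlo estimate of the integral of L(a, theta) * p(theta | D)."""
    return sum(loss(a, theta) for theta in draws) / len(draws)


# Evaluate a grid of candidate actions and keep the one with minimum expected loss.
candidate_actions = range(60, 141, 5)
best_action = min(candidate_actions, key=lambda a: expected_loss(a, posterior_draws))

print(best_action, round(expected_loss(best_action, posterior_draws), 1))
```

Searching over a small grid of actions keeps the sketch simple. With many or continuous actions, a numerical optimizer would likely replace the grid.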

Communicating our results in terms of the utility for decisions will help us bridge the gap from analysis to audience. Again, utility is an advanced topic for its placement in this text. Just have some awareness that we can inform decisions through such a process and, to the extent these details are vague, don’t worry. Move on for now.

1.3 Elements of writing

1.3.1 Purpose, audience, and craft

As we prepare to scope, and work through, a data analytics project, we must communicate in various ways if it is to have value. The reproducible workflow shown in the last chapter at least provides value as a communication to its immediate audience (its authors, as a reference for what they accomplished) and to those with similar needs to understand and critique the logical progression and scope of the project. That form of communication, however, will be less valuable than other communication forms for different audiences and purposes. Given the information compiled from our project, the content, we now consider communicating various aspects for other purposes and audiences.

The importance of adjusting communication is not unique to data analytics. Let’s consider, say, how the form of communication differs for a news story versus an op-ed. A long-time editor of the op-ed page at the New York Times explains,

The approach to argument that I learned in classes at Berkeley was much more similar to an op-ed than the inverted pyramid of daily journalism or the slow, anecdotal flow of feature stories that had dominated my professional life (Hall 2019).

The qualities of an op-ed piece must be, she writes: “surprising, concrete, and persuasive.” These qualities are similar to those we need in business communication, which generally drives decisions. All business writing begins with a) identifying the purpose for communicating and b) understanding your audiences’ scopes of knowledge and responsibilities in the problem context. Neither is trivial; both require research. To motivate this discussion, let’s consider and deconstruct two example memos written for data science projects: one for Citi Bike, the other for the Dodgers. It will be helpful for this exercise to place both memos side-by-side for comparison as we work through them below.

1.3.1.1 Communication structure

Let’s begin discussing the communication structure from several perspectives: purpose, narrative structure, sentence structure, and effective redundancy through hierarchy. Then, we consider audiences, story, and the importance of revision.

1.3.1.1.1 Purpose and audience

In the first example, we return to Citi Bike. After project ideation and scoping, we want to ask Citi Bike’s head of data analytics to let us write a more detailed proposal to conduct the data analytics project. We accomplish this in 250 words. The fully composed draft memo follows,

Example 1 (250-word Citi Bike memo)

Michael Frumin

Director of Product and Data Science

for Transit, Bikes, and Scooters at Lyft

To inform the public on rebalancing, let’s re-explore docking

availability and bike usage, this time with subway and weather.

Let’s re-explore station and ride data in the context of subway and weather information to gain insight for “rebalancing,” what our Dani Simmons explains is “one of the biggest challenges of any bike share system, especially in … New York where residents don’t all work a traditional 9-5 schedule, and though there is a Central Business District, it’s a huge one and people work in a variety of other neighborhoods as well” (Friedman 2017).

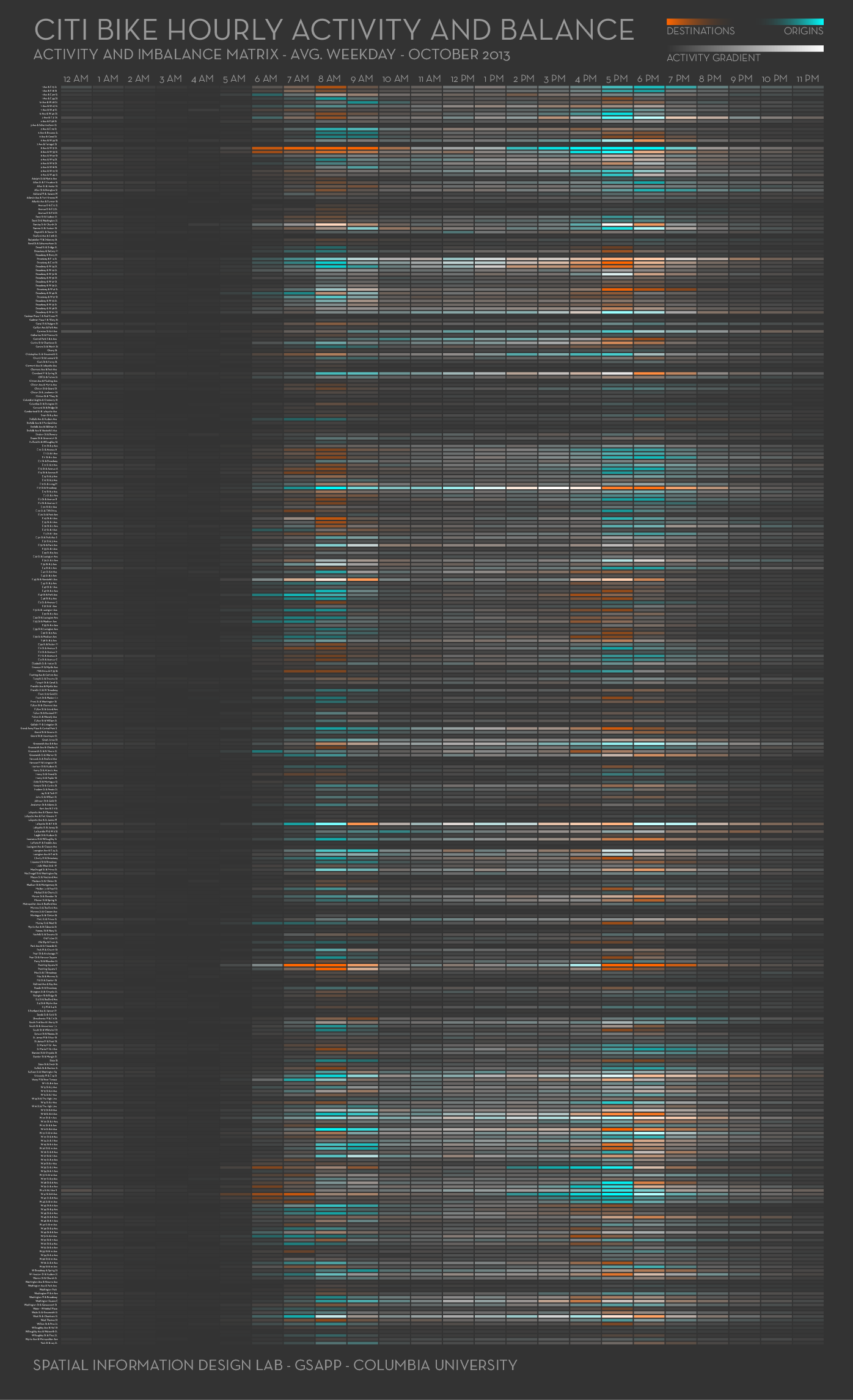

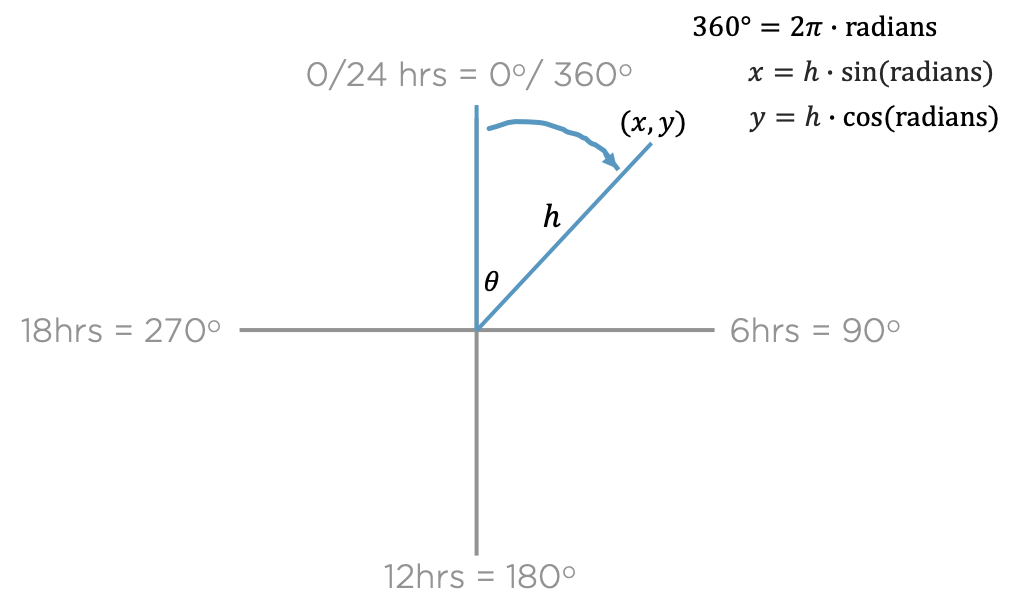

Recalling the previous, public study by Columbia University Center for Spatial Research (Saldarriaga 2013), it identified trends in bike usage using heatmaps. As those visualizations did not combine dimensions of space and time, which the public would find helpful to see trends in bike and station availability by neighborhood throughout a day, we can begin our analysis there.

We’ll use published data from NYC OpenData and The Open Bus Project, including date, time, station ID, and ride instances for all our docking stations and bikes since we began service. To begin, we can visually explore the intersection of trends in both time and location with this data to understand problematic neighborhoods and, even, individual stations, using current data.

Then, we will build upon the initial work, exploring causal factors such as the availability of alternative transportation (e.g., subway stations near docking stations) and weather. Both of which, we have available data that can be joined using timestamps.

The project aligns with our goals and shows the public that we are, in Simmons’s words, “innovative in how we meet this challenge.” Let’s draft a detailed proposal.

Sincerely,

Scott Spencer

Friedman, Matthew. “Citi Bike Racks Continue to Go Empty Just When Upper West Siders Need Them.” News. West Side Rag (blog), August 19, 2017. https://www.westsiderag.com/2017/08/19/citi-bike-racks-continue-to-go-empty-just-when-upper-west-siders-need-them.

Saldarriaga, Juan Francisco. “CitiBike Rebalancing Study.” Spatial Information Design Lab, Columbia University, 2013. https://c4sr.columbia.edu/projects/citibike-rebalancing-study.

It begins, in the title of this memo, with our purpose of writing, to conduct data analysis on specifically identified data to inform the issue of rebalancing, one of Citi Bike’s goals:

To inform the public on rebalancing, let’s re-explore docking availability and bike usage, this time with subway and weather.

This is what Doumont (2009d) calls a message. We should craft communications with messages, not merely information. Doumont explains that a message differs from raw information in that it presents “intelligent added value,” that is, something to understand about the information. A message interprets the information for a specific audience and for a specific purpose. It conveys the so what, whereas information merely conveys the what. What makes our title a message? Before answering this, let’s compare one of Doumont’s examples of information to that of a message. This sentence is mere information:

A concentration of 175 \(\mu g\) per \(m^3\) has been observed in urban areas.

A message, in contrast to information, would be the so what:

The concentration in urban areas (175 \(\mu g / m^3\)) is unacceptably high.

In our title, we request an action, approval for the exploratory analysis on specified data, for a particular purpose, to inform rebalancing. This purpose also implies the so what: unavailable bikes or docking slots, unbalanced stations, are bad. We’re asking to help remedy the issue.

This beginning is effective, if at all, only because we wrote it for a particular audience. Our audience is the head of data analytics at Citi Bike, who presumably knows the problem of rebalancing; it is well-known in, and beyond, the organization. Thus, our sentence implicitly refers back to information our audience already knows. Relying on his or her knowledge means we do not need to first explain what rebalancing is or why it is a problem.

Let’s introduce a second example before digging further into the structure of the Citi Bike memo. Having multiple examples to analyze has the added benefit of allowing us to induce some general, but effective, writing principles.

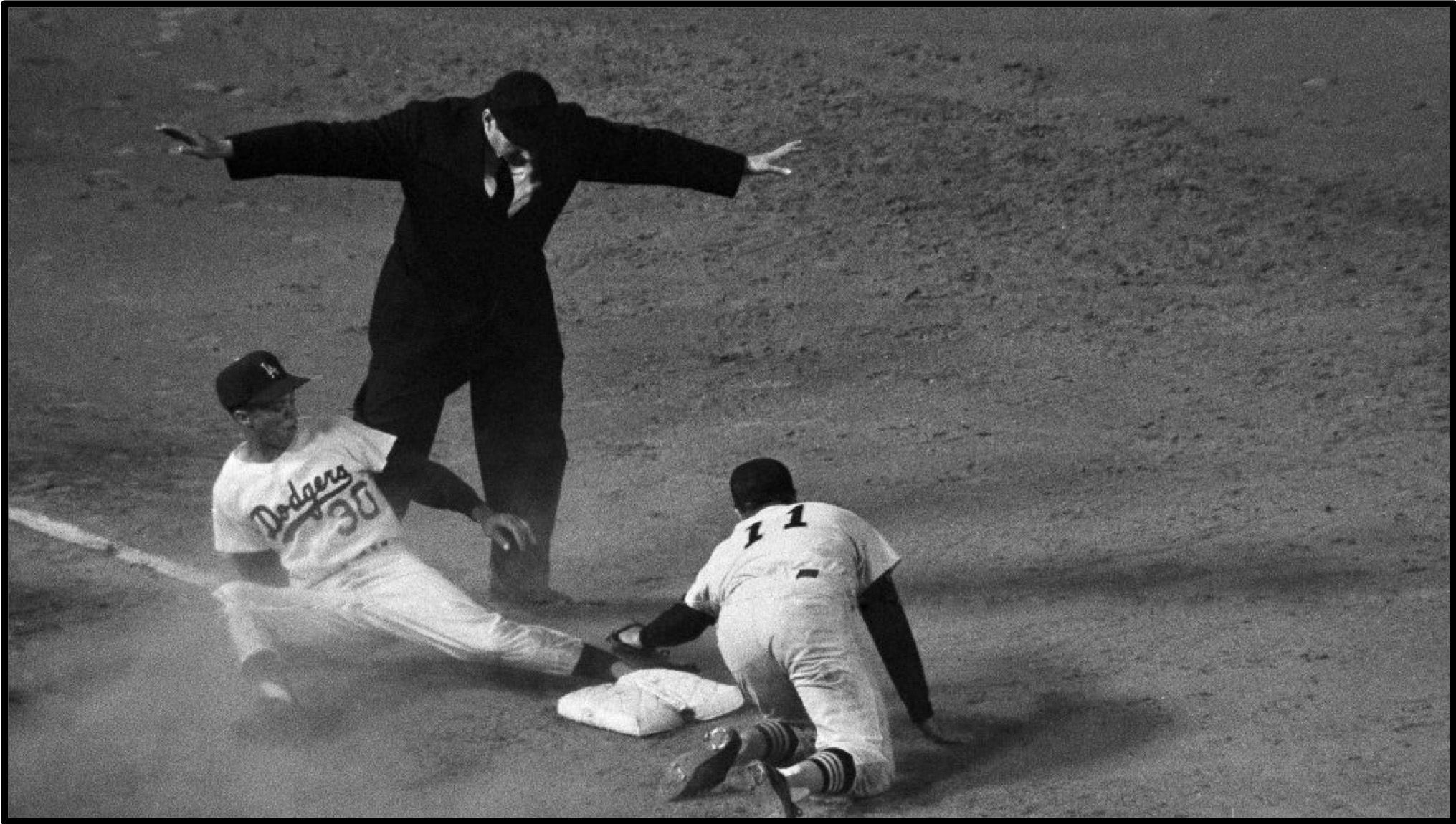

As a second example, professional baseball teams, including the Los Angeles Dodgers, make strategic decisions within the boundaries of the sport’s rules for the purpose of winning games. One of those rules involves stealing bases, as in figure 3.

Figure 3: In a close call, the baseball umpire spread his arms, signaling that the Dodgers baserunner successfully ran to the base faster than the catcher could throw the ball to the base to get him out — the runner stole the base. Knowing when to try to steal is strategic and depends on other events that are at least partly measured as data.

This concept is part of our next example, written to the Los Angeles Dodgers’s Director of Quantitative Analytics, Scott Powers. Powers earned his doctor of philosophy in statistics from Stanford, has authored publications in machine learning, knows R programming, and as an employee of the Dodgers, knows their history. Powers manages a team of data scientists; their responsibilities include assessing player and team performance. The draft memo follows,

Example 2 (250-word Dodgers memo)

Scott Powers

Director of quantitative analysis

at Los Angeles Dodgers

Our game decisions should optimize expectations.

Let’s test the concept by modeling decisions to steal.

Our Sandy Koufax pitched a perfect game, the most likely event sequence, only once: those, we do not expect or plan. Since our decisions based on other most likely events don’t align with expected outcomes, we leave wins unclaimed. To claim them, let’s base decisions on expectations flowing from decision theory and probability models. A joint model of all events works best, but we can start small with, say, decisions to steal second base.

After defining our objective (e.g. optimize expected runs) we will, from Statcast data, weight everything that could happen by its probability and accumulate these probability distributions. Joint distributions of all events, an eventual goal, will allow us to ask counterfactuals — “what if we do this” or “what if our opponent does that” — and simulate games to learn how decisions change win probability. It enables optimal strategy.

Rational and optimal, this approach is more efficient for gaining wins. For perspective, each added win from the free-agent market costs 10 million, give or take, and the league salary cap prevents unlimited spend on talent. There is no cap, however, on investing in rational decision processes.

Computational issues are being addressed in Stan, a tool that enables inferences through advanced simulations. This open-source software is free but teaching its applications will require time. To shorten our learning curve, we can start with Stan interfaces that use familiar syntax (like lme4) but return joint probability distributions: R packages rethinking, brms, or rstanarm. Perfect games aside, we can test the concept with decisions to steal.

Sincerely,

Scott Spencer

The beginning of this memo reads:

Our game decisions should optimize expectations. Let’s test the concept by modeling decisions to steal.

The first three words, the subject of this sentence, signal to our audience something they have experience with: our game decisions. It’s familiar. Then, we provide a call to action: our game decisions should optimize expectations. Let’s test the concept by modeling decisions to steal. As with the Citi Bike memo (example 1), the Dodgers memo (example 2) begins with a message and stated purpose. And as with Citi Bike, the information in the title message of the Dodgers memo is shared knowledge with our audience. In fact, we rely on the educational background of our audience, who we know has earned a doctor of philosophy in statistics, when including the concept to “optimize expectations” without first explaining what that is, because we know, or from the audience’s background can assume, that the audience understands the concept.

So in both cases, we have begun with language and topics already familiar to the audience, which follows the more general writing advice from Doumont (2009d), who instructs us to

put ourselves in the shoes of the audience, anticipating their situation, their needs, their expectations. Structure the story along their line of reasoning, recognizing the constraints they might bring: their familiarity with the topic, their mastery of the language, the time they can free for us.

What else do we know about chief analytics officers in general? Their jobs require them to be efficient with their time. Thus, by starting with our purpose, letting them know what we want them to do, we are considerate of their “constraints” and “time they can free for us.”

Beginning with a purpose and call to action also allows the executive to understand the memo’s relevance to them, in terms of their decision-making, immediately; they have a reason to continue reading.

The persuasive power of beginning with the main message for your audience, or issue relevant to your audience, is nearly as timeless as it is true. Cicero, the Roman philosopher with a treatise on rhetoric, explained that we must not begin with details because “it forms no part of the question, and men are at first desirous to learn the very point that is to come under their judgment” (Cicero and Watson 1986).

Next, let’s review the structure of these memos to see whether we’ve “structur[ed] the story along their line of reasoning.”

1.3.1.1.2 Common ground

Let’s compare the first sentences of the body of both examples. The Citi Bike memo begins,

Let’s re-explore station and ride data in the context of subway and weather information to gain insight for “rebalancing,” what our Dani Simmons explains is “one of the biggest challenges of any bike share system, especially in … New York where residents don’t all work a traditional 9-5 schedule, and though there is a Central Business District, it’s a huge one and people work in a variety of other neighborhoods as well” (Friedman 2017).

This sentence starts with the title request, and then ties the purpose, rebalancing, to corporate goals. It does so by quoting the company’s spokesperson, which serves as evidence of the so what. Offering, and accepting, Simmons’s quote serves a second purpose in writing: it helps to establish common ground with our audience.

If we want to affect the behaviors and beliefs of the person in front of us, we need to first understand what goes on inside their head and establish common ground. Why? When you provide someone with new data, they quickly accept evidence that confirms their preconceived notions (what are known as prior beliefs) and assess counter evidence with a critical eye (Sharot 2017). Four factors come into play when people form a new belief: their old belief (this is technically known as the “prior”), their confidence in that old belief, the new evidence, and their confidence in that evidence. Focusing on what you and your audience have in common, rather than what you disagree about, enables change. Let’s check for common ground in the Dodgers memo. The first sentence of the body begins,

Our Sandy Koufax pitched a perfect game, the most likely event sequence, only once: those, we do not expect or plan.

Sandy Koufax is one of the most successful Dodgers players in the history of the franchise. He is one of fewer than 25 pitchers in the history of baseball to pitch a perfect game, something extraordinary. Our audience, as an employee of the Dodgers, will be familiar with this history. It is also something very positive, and shared, between author and audience. It is intended to establish common ground, in two ways. Along with that positive, shared history, it sets up an example of a statistical mode, one that we know the audience would agree is unhelpful for planning game strategy because it is too rare, even if it is a statistical mode. It helps to create common ground or agreement that it may not be best to use statistical modes for making decisions.
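A small calculation may help show why a statistical mode can be a poor basis for planning. The Python sketch below rests on a strong simplifying assumption that is not from the memo: a game is treated as 27 independent plate appearances, each ending in an out with the same hypothetical probability of 0.7.

```python
# Simplifying assumption: 27 independent plate appearances, each ending in
# an out with the same probability. The value 0.7 is hypothetical.
p_out = 0.7
n_batters = 27

# The single most likely sequence (the mode) is "every batter is out".
p_mode_sequence = p_out ** n_batters

# Any one specific sequence in which exactly one batter reaches base
# is less likely than the all-outs sequence.
p_one_reach_sequence = p_out ** (n_batters - 1) * (1 - p_out)

# Yet the expected number of batters reaching base is far from zero.
expected_reaching_base = n_batters * (1 - p_out)

print(f"P(all outs) = {p_mode_sequence:.6f}")
print(f"Expected batters reaching base = {expected_reaching_base:.1f}")
```

Under these assumptions the all-outs sequence is more probable than any other single sequence, but it is still very rare, while the expectation summarizes what we should plan around.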

In both memos, we are also trying to use an interesting fact that may be unexpected or surprising in this context (Sandy Koufax, Dani Simmons) to grab our audience’s attention. In journalism, this is one way to create the lead. Zinsser (2001) explains that the most important sentence in any communication is the first one. If it doesn’t induce the reader to proceed to the second sentence, your communication is dead. And if the second sentence doesn’t induce the audience to continue to the third sentence, it’s equally dead. Readers want to know, very soon, what’s in it for them.

Your lead must capture the audience immediately, cajoling them with freshness, or novelty, or paradox, or humor, or surprise, or with an unusual idea, or an interesting fact, or a question. Next, it must provide hard details that tell the audience why the piece was written and why they ought to read it.

1.3.1.1.3 Details

At this point in both memos, we have begun with information familiar to our audience, relevant to their job in decision-making, and established our purpose. We have also started with information they would agree with. We’ve created common ground. The stage is set. What’s next? Here are the next two sentences in the body of the Citi Bike memo:

Recalling the previous, public study by Columbia University Center for Spatial Research (Saldarriaga 2013), it identified trends in bike usage using heatmaps. As those visualizations did not combine dimensions of space and time, which the public would find helpful to see trends in bike and station availability by neighborhood throughout a day, we can begin our analysis there.

These two sentences introduce previous work on rebalancing (background) and its limitations, and propose to start where the prior work stopped. This accomplishes two objectives. First, it helps our audience understand beginning details of our proposed project. Second, it helps the audience see that our proposed work is not redundant to what we already know. Thus, we began the details of our proposed solution. What is described in the Dodgers memo at a similar point? This:

To claim them, let’s base decisions on expectations flowing from decision theory and probability models. A joint model of all events works best, but we can start small with, say, decisions to steal second base.

As with Citi Bike, the next two sentences start introducing details of the proposed project.

After introducing the nature of the proposed project in both memos, we identify data that makes the proposed project feasible. In the Citi Bike memo we identify specific categories of data and the publicly available source of those data:

We’ll use published data from NYC OpenData and The Open Bus Project, including date, time, station ID, and ride instances for all our docking stations and bikes since we began service.

Similarly, in the Dodgers memo,

After defining our objective (e.g. optimize expected runs) we will, from Statcast data, weight everything that could happen by its probability and accumulate these probability distributions.

It may seem we are less descriptive of the data than in the Citi Bike memo, but the label “Statcast” signals to our particular audience a group of specific, publicly available variables collected by the Statcast system, see (Willman, n.d.) and (Willman 2020).

Having identified data, both memos then describe more details of our proposed methodology. In Citi Bike, we discuss two stages. First, we plan to graphically explore specific variables in search of specific trends:

To begin, we can visually explore the intersection of trends in both time and location with this data to understand problematic neighborhoods and, even, individual stations, using current data.

Then, we specifically identify additional data we plan to join and explore as causal factors for problem areas:

Then, we will build upon the initial work, exploring causal factors such as the availability of alternative transportation (e.g., subway stations near docking stations) and weather. Both of which, we have available data that can be joined using timestamps.

Similarly, in the Dodgers memo, we go into the planned methodology. We plan to model expectations from the data:

…from Statcast data, weight everything that could happen by its probability and accumulate these probability distributions.

1.3.1.1.4 Benefits

Having described our data and methodology in both memos, we now describe some benefits. In the Citi Bike memo,

The project aligns with our goals and shows the public that we are, in Simmons’s words, “innovative in how we meet this challenge.”

And in the Dodgers memo, perhaps because we believe the benefits are comparatively less obvious, or less proven, we further develop them:

Joint distributions of all events, an eventual goal, will allow us to ask counterfactuals — “what if we do this” or “what if our opponent does that” — and simulate games to learn how decisions change win probability. It enables optimal strategy.

Rational and optimal, this approach is more efficient for gaining wins. For perspective, each added win from the free-agent market costs 10 million, give or take, and the league salary cap prevents unlimited spend on talent. There is no cap, however, on investing in rational decision processes.

1.3.1.1.5 Limitations

In the Citi Bike memo, we didn’t identify limitations. Should we?

In the Dodgers memo, we do, while also explaining how we plan to overcome those limitations:

Computational issues are being addressed in Stan, a tool that enables inferences through advanced simulations. This open-source software is free but teaching its applications will require time. To shorten our learning curve, we can start with Stan interfaces that use familiar syntax (like lme4) but return joint probability distributions: R packages rethinking, brms, or rstanarm.

1.3.1.1.6 Conclusion

Finally, we wrap up in both memos. In the Citi Bike memo, after echoing the quote from Simmons, we state,

Let’s draft a detailed proposal.

Again, the Dodgers memo is similar. There, we circle back to our introduction to Sandy Koufax and his perfect game, then conclude,

Perfect games aside, we can test the concept with decisions to steal.

Again, this idea of echoing something from where we began is journalism’s complement to the lead.

Zinsser explains that, ideally, the ending should encapsulate the idea of the piece and conclude with a sentence that jolts us with its fitness or unexpectedness. Consider bringing the story full circle — to strike at the end an echo of a note that was sounded at the beginning. It gratifies a sense of symmetry.

Executives’ lines of reasoning commonly, but do not always, follow the general document structure described above. If we don’t have information otherwise, this is a good start.

1.3.1.2 Narrative structure

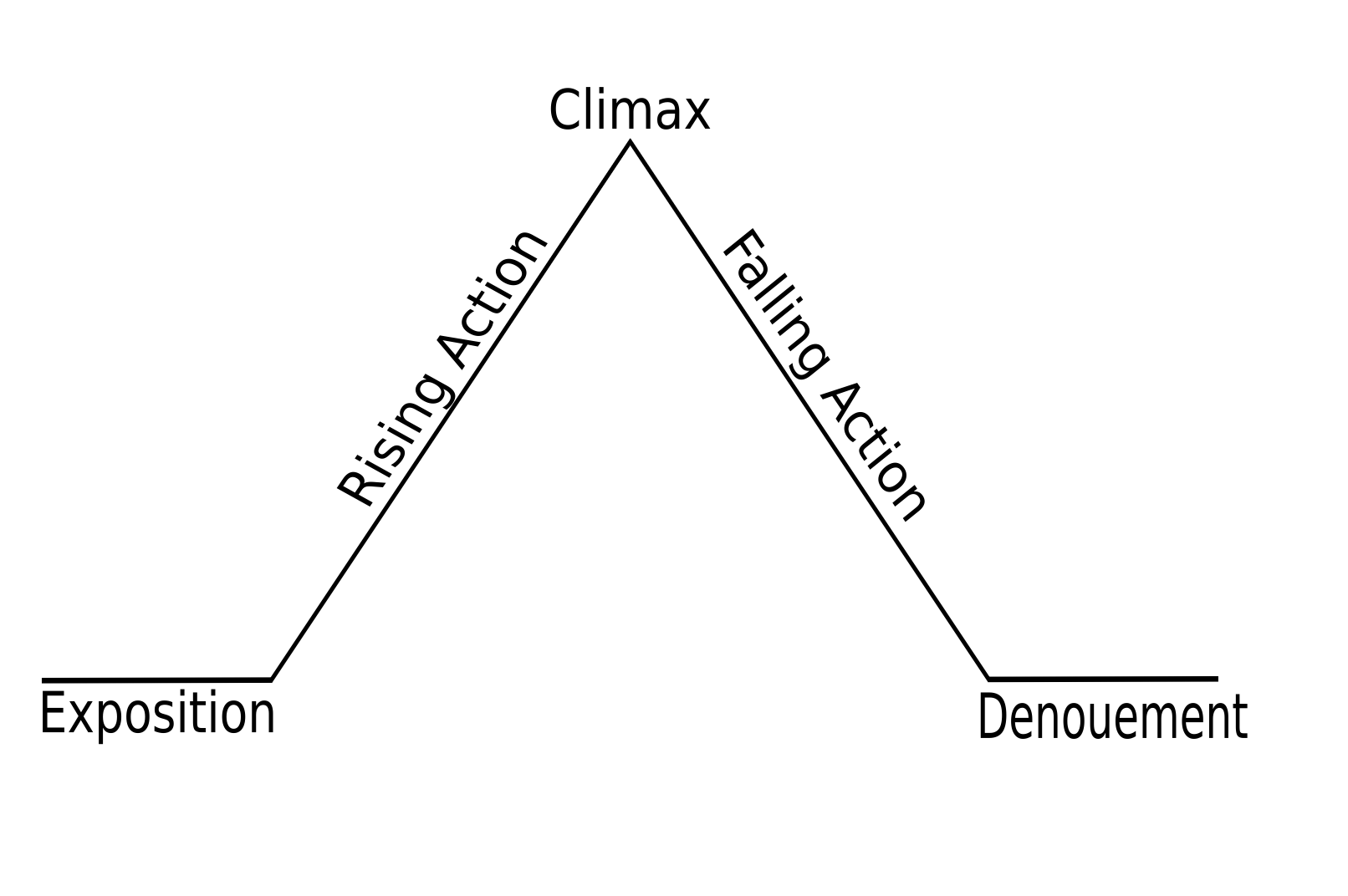

The above ideas, or tools, are helpful in structuring and writing persuasive memos, and longer communications for that matter. And as writing lengthens, the next couple of related tools can be especially helpful in refining the narrative structure in a way that holds our audience’s interest by creating tension. German dramatist Gustav Freytag in the late 19th century illustrated a narrative arc used in Shakespearean dramas.

The primary elements of an applied analytics project may be thought of as a well-articulated business problem, a data science solution, and a measurable outcome to produce value for the organization. The analytics project may thus be conceptualized as a narrative arc, with a beginning (problem), middle (analytics), and end (overcoming of the problem), along with characters (analysts, colleagues, clients) who play important roles.

Duarte (2010) used the narrative arc to conceptualize an interesting alternative way to think about structure that creates tension: alternating what is with what may be:

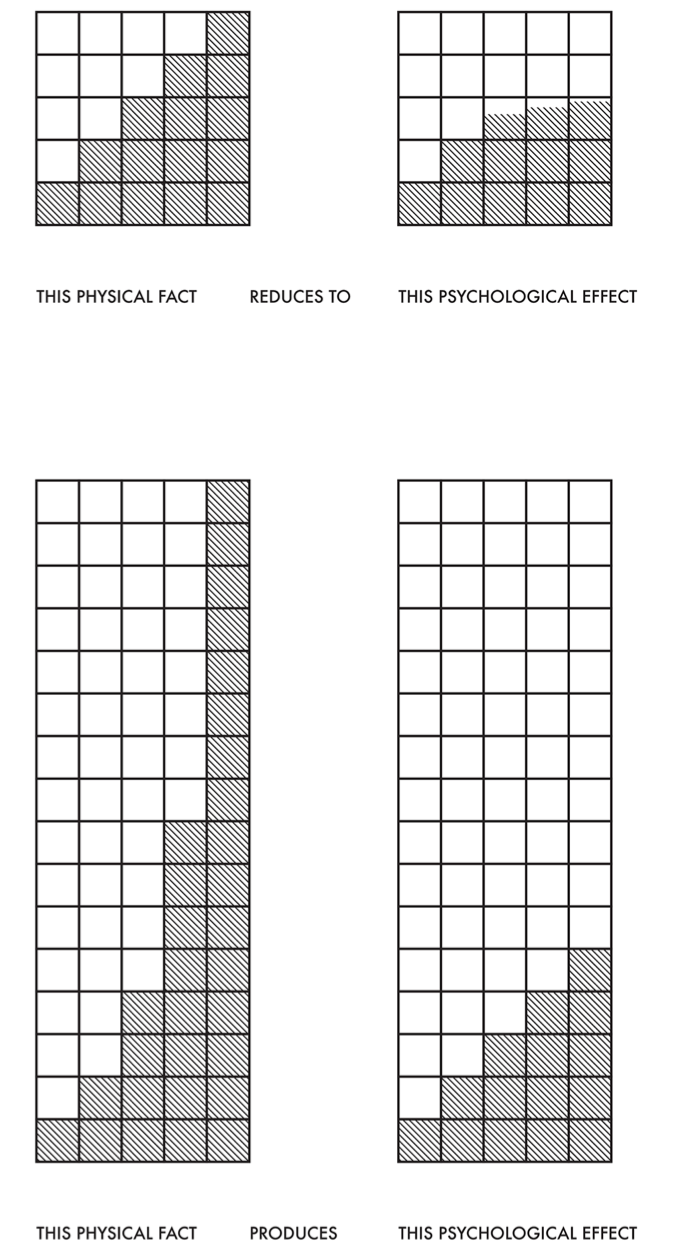

We can repeat this approach (see figure 4), switching between what is and what may be to maintain a sense of tension or interest throughout a narrative arc. Once you become aware of this pattern, you may be surprised how much writing you find in this form.

Figure 4: Duarte illustrates the repeated switching between what is and what may be, which helps to hold audience interest throughout a narrative.

This juxtaposition of two states for creating tension is another form of comparison.

Exercise 3 Revisit the two memos, examples 1 and 2. Identify sentences or paired sentences that shift focus from what is to what could be, creating a contrast.

Let’s now look more closely at sentence structure.

1.3.1.3 Sentence structure

Placing old information before new within a sentence generally improves understanding. The concept has also been described as an information unit. “The information unit is what its name implies: a unit of information. Information, in this technical grammatical sense, is the tension between what is already known or predictable and what is new or unpredictable” (Halliday and Matthiessen 2004). As a general principle, “readers follow a story most easily if they can begin each sentence with a character or idea that is familiar to them, either because it was already mentioned or because it comes from the context” (J. M. Williams, Bizup, and Fitzgerald 2016).

Consider an alternative flow of information: putting new information before old. Reversing the information flow will likely confuse your audience. This point was clearly demonstrated in a classic movie, Memento (figure 5).

Figure 5: Poster for Memento, a movie designed to purposefully confuse the audience by narrating the story (partly) in reverse.

In Memento, director Christopher Nolan tells the story of a man with anterograde amnesia (the inability to form new memories) searching for someone who attacked him and killed his wife, using an intricate system of Polaroid photographs and tattoos to track information he cannot remember. The story is presented as two different sequences of scenes interspersed during the film: a series in black-and-white that is shown chronologically, and a series of color sequences shown in reverse order (simulating for the audience the mental state of the protagonist). The two sequences meet at the end of the film, producing one complete and cohesive narrative. Yet the reversed order is (effectively) designed to hold the audience in confusion so that they may get a sense of the confusion experienced by someone with this condition. Indeed, that we demonstrate this with film implies that ordering in visual representation matters too, and it does. As such, we revisit this in the context of images.

For similar reasons, explain complex information last. This is particularly important in three contexts: introducing a new technical term, presenting a long or complex unit of information, and introducing a concept before developing its details. And just as the old-before-new paradigm helps to convey messages, so too does expressing crucial actions in verbs. Make your central characters the subjects of those verbs; keep those subjects short, concrete, and specific.

Exercise 4 Revisit the Dodgers memo again, example 2. This time, for each sentence and the words within it, try to identify whether each word or phrase is new or old. When determining this, consider the words, phrases, and sentences preceding the one under analysis. Of note, you may also consider the audience’s background knowledge as a form of old information.

1.3.1.4 Layering and hierarchy

Most communications benefit from providing multiple levels at which the narrative may be read. Even emails and memos, which are concise communications, enable two layers: the title and the main body. Thus, the title should inform the audience of the relevance of the communication: what it is the author wants them to do or know. It should also, or at least, invite the audience to learn more through the details of the main body. As the communication lengthens, more layers may be used. The title’s purpose remains the same, as does the main body’s. But we may add middle layers: headers and subheaders (Doumont 2009d). These should not be generic. Instead, the audience should be able to read just these and understand the gist of the communication. This concept is well established where we intend persuasive communication. A well-known instructor of legal writing, Guberman (2014), explains how to draft this middle layer:

Strong headings are like a good headline for a newspaper article: they give [the audience] the gist of what [they] need to know, draw [them] into text [they] might otherwise skip, and even allow … a splash of creativity and flair.

The old test is still the best. Could [the audience] skim your headings and subheadings and know why [they should act]?

A good way to provide these “signposts” is to make your headings complete thoughts that, if true, would push you toward the finish line.

In accord, Scalia and Garner (2008) write:

Since clarity is the all-important objective, it helps to let the reader know in advance what topic you’re to discuss. Headings are most effective if they’re full sentences announcing not just the topic but your position on that topic.

In short, headings should be what Doumont (2009d) calls messages. Headings provide “effective redundancy.” The redundancy gained from headers may be twofold. First, they introduce your message before the detailed paragraphs and, second, they may be collected up front as a table of contents. Even short communications benefit from headers, and communications of at least several pages will likely benefit from such a table of contents along with headers.

1.3.1.5 Story

At this point, we’ve identified a problem or opportunity upon which our entity may decide to act. We’ve found data and considered how we might uncover insights to inform decisions. We’ve scoped an analytics project. In beginning to write, we’ve considered document structure, sentence structure, and narrative. We’ve also begun to consider our audience.

Can we, and should we, use story to help us communicate? Consider its use in the context of an academic debate on the question: Krzywinski and Cairo (2013a), Katz (2013), and Krzywinski and Cairo (2013b); and for more perspective, Gelman and Basbøll (2014).

Exercise 5 Explain whether, and why or why not, you believe any of the arguments in the debate about using story to explain scientific results are correct.

Let’s now focus on how we may directly employ story. A little research on story, though, reveals differences in use of the term. “Story, n.” (2015) defines story generally as a narrative:

An oral or written narrative account of events that occurred or are believed to have occurred in the past…

Distinguished novelist E.M. Forster famously described story as “a series of events arranged in their time sequence.” Of note, he also compares and distinguishes story from plot: “plot is also a narrative of events, the emphasis falling on causality: ‘The king died and then the queen died’ is a story. But ‘the king died and then the queen died of grief’ is a plot. The time sequence is preserved, but the sense of causality overshadows it” (Forster 1927). But not just any narrative works for moving our audiences to act. Harari (2014) explains,

the difficulty lies not in telling the story, but in convincing everyone else to believe it. . .

Let’s consider other points of view.

To understand the narrative arc of successful stories, John Yorke studied numerous stories and from those induced general principles (Yorke 2015). A journalist and author has also studied narrative structure but with a different approach: he focuses on the cognitive science and psychology of how our mind works and relates those characteristics to story (Storr 2020). Story, writes Storr, typically begins with “unexpected change”, the “opening of an information gap”, or both. Humans naturally want to understand the change, or close that gap; it becomes their goal. The messages and information that close the gap, then, form what we may think of as the narrative’s plot.

Indeed, Storr (2020) suggests that the varying so-called designs for plot structure<sup>9</sup> are all really different approaches to describing change:

But I suspect that none of these plot designs is actually the ‘right’ one. Beyond the basic three acts of Western storytelling, the only plot fundamental is that there must be regular change, much of which should preferably be driven by the protagonist, who changes along with it. It’s change that obsesses brains. The challenge that storytellers face is creating a plot that has enough unexpected change to hold attention over the course of an entire novel or film. This isn’t easy. For me, these different plot designs represent different methods of solving that complex problem. Each one is a unique recipe for a plot that moves relentlessly forwards, builds in intrigue and tension and never stops changing.

A quantitative analysis of over 100,000 narratives suggests this too (Robinson 2017).

We evolved for recognizing change, and for cause and effect. Thus, a narrative or story is driven forward by linking together change after change as instances of cause and effect. Indeed, to create initial interest, we only need to foreshadow change.

Let’s consider some examples that use information gaps and narratives in the context of data science. The short narratives in Wainer (2016b), each about a data science concept that people frequently misunderstand, are exemplary. He begins each of these by setting up a contrast or information gap. In chapter 1, for example, the author teaches the “Rule of 72” as a heuristic to think about compounding quantities by posing a question<sup>10</sup> to set up an information gap:

Great news! You have won a lottery and you can choose between one of two prizes. You can opt for either:

$10,000 every day for a month, or

One penny on the first day of the month, two on the second, four on the third, and continued doubling every day thereafter for the entire month.

Which option would you prefer?
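The arithmetic that closes this particular gap can be sketched in a few lines of Python. This is a minimal illustration, not Wainer's own presentation: the function names are hypothetical, the 30-day month is an assumption (the quoted question does not fix the month's length), and amounts are kept in whole cents to avoid rounding. The sketch also includes the Rule of 72 itself, which approximates the number of periods a quantity needs to double as 72 divided by the percentage growth rate per period, alongside the exact logarithmic calculation for comparison.

```python
import math

CENTS_PER_DOLLAR = 100


def flat_prize_cents(days: int, dollars_per_day: int = 10_000) -> int:
    """Total, in cents, of a fixed daily payment over `days` days."""
    return days * dollars_per_day * CENTS_PER_DOLLAR


def doubling_prize_cents(days: int) -> int:
    """Total, in cents, of one cent on day one, doubling every day after."""
    # 1 + 2 + 4 + ... + 2**(days - 1), which equals 2**days - 1
    return sum(2 ** day for day in range(days))


def rule_of_72(rate_percent: float) -> float:
    """Approximate periods needed to double at `rate_percent` growth per period."""
    return 72 / rate_percent


def exact_doubling_periods(rate_percent: float) -> float:
    """Exact periods needed to double under compound growth."""
    return math.log(2) / math.log(1 + rate_percent / 100)


def as_dollars(cents: int) -> str:
    """Format a whole number of cents as a dollar string."""
    return f"${cents // CENTS_PER_DOLLAR:,}.{cents % CENTS_PER_DOLLAR:02d}"


if __name__ == "__main__":
    days = 30  # assumption: the quoted question does not say how long the month is
    print("Flat prize:    ", as_dollars(flat_prize_cents(days)))
    print("Doubling prize:", as_dollars(doubling_prize_cents(days)))
    print("Rule of 72 at 6%:", rule_of_72(6), "periods")
    print("Exact at 6%:     ", round(exact_doubling_periods(6), 2), "periods")
```

Under the 30-day assumption, the fixed payment totals $300,000.00, while the doubling pennies total $10,737,418.23. The Rule of 72 is a heuristic best suited to modest growth rates, so the sketch compares it with the exact figure at 6 percent rather than at the 100 percent daily growth of the penny prize.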

Similarly, in chapter 2, Wainer (2016b) teaches us implications of the law of large numbers, again using a question to set up an information gap:

“Virtuosos becoming a dime a dozen,” exclaimed Anthony Tommasini, chief music critic of the New York Times in his column in the arts section of that newspaper on Sunday, August 14, 2011.

…

But why?

Once he has set up his narratives, he bridges the gap. Let’s keep in mind that his purpose in these stories is audience awareness: to teach. We can adapt these narrative<sup>11</sup> concepts, though, in communications for other purposes.

Exercise 6 By this discussion, are the Citi Bike and Dodgers memos stories? If not, what may they lack? If so, explain what structure or form makes them stories. Do the story elements add persuasive effect, or would they if used?

1.3.1.6 Revise for the audience

“We write a first draft for ourselves; the drafts thereafter increasingly for the reader” (J. Williams and Colomb 1990). Revision lets us switch from writing to understand to writing to explain. Switching audience is critical, and not doing so is a common mistake. Schimel (2012) explains one manifestation of the error:

Using an opening that explains a widely held schema is a flaw common with inexperienced writers. Developing scholars are still learning the material and assimilating it into their schemas. It isn’t yet ingrained knowledge, and the process of laying out the information and arguments, step by step, is part of what ingrains it to form the schema. Many developing scholars, therefore, have a hard time jumping over this material by assuming that their readers take it for granted. Rather, they are collecting their own thoughts and putting them down. There is nothing wrong with explaining things for yourself in a first draft. Many authors aren’t sure where they are going when they start, and it is not until the second or third paragraph that they get into the meat of the story. If you do this, though, when you revise, figure out where the real story starts and delete everything before that.

Revision gives us the opportunity to focus on our audience once we understand what we have learned. This benefit alone makes revision worthwhile.

But it does more, especially when we allow time to pass between revisions: “If you start your project early, you’ll have time to let your revised draft cool. What seems good one day often looks different the next” (Booth et al. 2016a). As you revise, read aloud. While normal conversations do not typically follow grammatically correct language, well-written communications should smoothly flow when read aloud. Try reading this sentence aloud, following the punctuation:

When we read prose, we hear it…it’s variable sound. It’s sound with — pauses. With emphasis. With, well, you know, a certain rhythm (Goodman 2008).

And when revising, consider each word and phrase, and test whether removing that word or phrase changes the context or meaning of the sentence for your audience. If not, remove it. In a similar manner, when choosing between two words with equally precise meaning, it is generally best to use the word with fewer syllables or that flows more naturally when read aloud.

Consider this 124-word blog post for what became a data-for-good project:

Improving Traffic Safety Through Video Analysis

Nearly 2,000 people die annually as a result of being involved in traffic-related accidents in Jakarta, Indonesia. The city government has invested resources in thousands of traffic cameras to help identify potential short-term (e.g. vendor carts in a hazardous location) and long-term (e.g. poorly engineered intersections) safety risks. However, manually analysing the available footage is an overwhelming task for the city’s Transportation Agency. In support of the Jakarta Smart City initiative, our team hopes to build a video-processing pipeline to extract structured information from raw traffic footage. This information can be integrated with collision, weather, and other data in order to build models which can help public officials quickly identify and assess traffic risks with the goal of reducing traffic-related fatalities and severe injuries.